Installing and using Forklift 2.10

- About Forklift

- Cold migration and warm migration in Forklift

- Warm migration precopy stage

- Preparing the cutover stage for a warm migration

- Migration speed comparison

- About cold and warm migration

- Cold migration

- Warm migration

- Precopy stage

- Cutover stage

- Advantages and disadvantages of cold and warm migrations

- Conclusions

- Power status of virtual machines during cold migrations

- Power status of virtual machines during warm migrations

- Advantages and disadvantages of cold and warm migrations

- Conclusions

- About live migration

- Prerequisites and software requirements for all providers

- Specific software requirements for each provider

- Installing and configuring the Forklift Operator

- Migrating virtual machines by using the OKD web console

- Migrating virtual machines from VMware vSphere

- Adding a VMware vSphere source provider

- Selecting a migration network for a VMware source provider

- Adding a KubeVirt destination provider

- Selecting a migration network for a KubeVirt provider

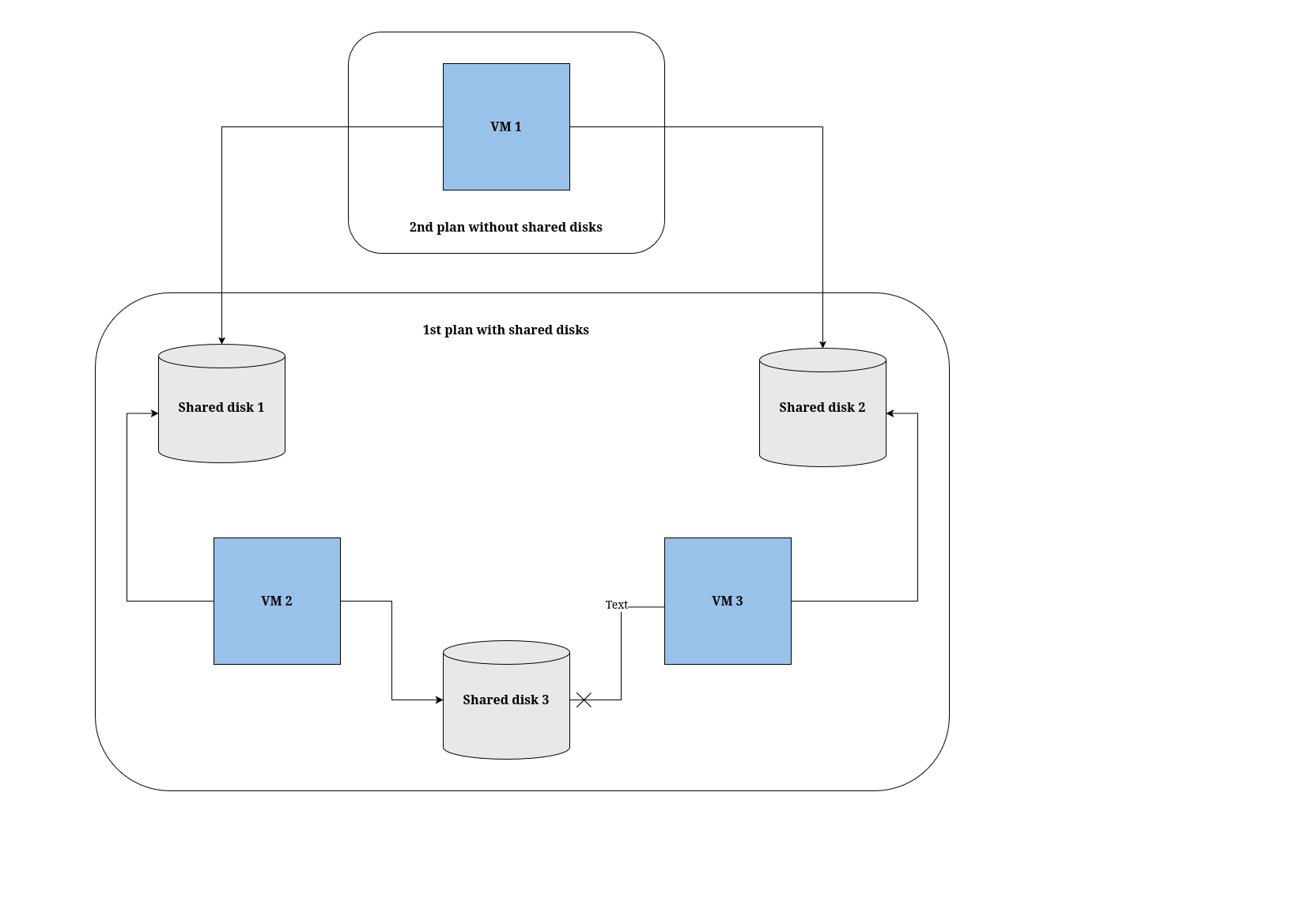

- Creating a VMware vSphere migration plan by using the MTV wizard

- Running a migration plan in the MTV UI

- Migration plan options

- About network maps in migration plans

- About storage maps in migration plans

- Canceling a migration

- Migrating virtual machines from oVirt

- Adding an oVirt source provider

- Adding a KubeVirt destination provider

- Selecting a migration network for a KubeVirt provider

- Creating a Red Hat Virtualization migration plan by using the MTV wizard

- Running a migration plan in the MTV UI

- Migration plan options

- About network maps in migration plans

- About storage maps in migration plans

- Canceling a migration

- Migrating virtual machines from OpenStack

- Adding an OpenStack source provider

- Adding a KubeVirt destination provider

- Selecting a migration network for a KubeVirt provider

- Creating an OpenStack migration plan by using the MTV wizard

- Running a migration plan in the MTV UI

- Migration plan options

- About network maps in migration plans

- About storage maps in migration plans

- Canceling a migration

- Migrating virtual machines from OVA

- Adding an Open Virtual Appliance (OVA) source provider

- Adding a KubeVirt destination provider

- Selecting a migration network for a KubeVirt provider

- Creating an Open Virtualization Appliance (OVA) migration plan by using the MTV wizard

- Running a migration plan in the MTV UI

- Migration plan options

- About network maps in migration plans

- About storage maps in migration plans

- Canceling a migration

- Migrating virtual machines from KubeVirt

- Adding a Red Hat KubeVirt source provider

- Adding a KubeVirt destination provider

- Selecting a migration network for a KubeVirt provider

- Creating a KubeVirt migration plan by using the MTV wizard

- Running a migration plan in the MTV UI

- Migration plan options

- About network maps in migration plans

- About storage maps in migration plans

- Canceling a migration

- Migrating virtual machines from the command line

- Permissions needed by non-administrators to work with migration plan components

- Running a VMware vSphere migration from the command-line

- Running an oVirt migration from the command-line

- Running an OpenStack migration from the command-line

- Running an Open Virtual Appliance (OVA) migration from the command-line

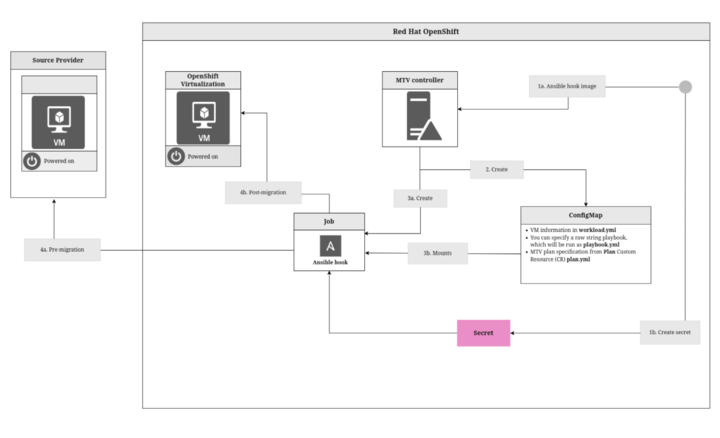

- Advanced migration options

- Upgrading Forklift

- Uninstalling Forklift

- Forklift performance recommendations

- Ensure fast storage and network speeds

- Ensure fast datastore read speeds to ensure efficient and performant migrations

- Endpoint types

- Set ESXi hosts BIOS profile and ESXi Host Power Management for High Performance

- Avoid additional network load on VMware networks

- Control maximum concurrent disk migrations per ESXi host

- Migrations are completed faster when migrating multiple VMs concurrently

- Migrations complete faster using multiple hosts

- Multiple migration plans compared to a single large migration plan

- Maximum values tested for cold migrations

- Warm migration recommendations

- Maximum values tested for warm migrations

- Recommendations for migrating VMs with large disks

- Increasing AIO sizes and buffer counts for NBD transport mode

- Troubleshooting

- Architecture

- Logs and custom resources

- Telemetry

- Additional information

About Forklift

You can use Forklift to migrate virtual machines (VMs) from the following source providers to KubeVirt destination providers:

- VMware vSphere
- oVirt
- OpenStack
- Open Virtual Appliances (OVAs) that were created by VMware vSphere
- Remote KubeVirt clusters

Types of migration

Forklift supports three types of migration: cold, warm, and live.

Cold migration is available for all of the source providers listed above. This type of migration migrates VMs that are powered off and does not require common shared storage.

Warm migration is available only for VMware vSphere and oVirt. This type of migration migrates VMs that are powered on and requires common shared storage.

These two types of migration are discussed in detail in About cold and warm migration.

Live migration is available only for migrations between KubeVirt clusters or between namespaces on the same KubeVirt cluster. It requires Forklift version 2.10 or later and KubeVirt version 4.20 or later.

Live migration is discussed in detail in About live migration.

Live migration is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process. For more information about the support scope of Red Hat Technology Preview features, see https://access.redhat.com/support/offerings/techpreview/.
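The provider and version rules above fit in a small lookup table. The following is a minimal Python sketch, not part of Forklift: the provider keys (`vsphere`, `ovirt`, and so on) and the helper names are hypothetical, and version strings are assumed to be plain dotted numbers such as `2.10.0`.

```python
# Hypothetical helper, not part of Forklift: encodes which migration
# types this section lists for each source provider.
SUPPORTED_TYPES: dict[str, set[str]] = {
    "vsphere": {"cold", "warm"},
    "ovirt": {"cold", "warm"},
    "openstack": {"cold"},
    "ova": {"cold"},
    "kubevirt": {"cold", "live"},  # cold: remote clusters; live: clusters or namespaces
}


def supported_types(provider: str) -> set[str]:
    """Return the migration types available for a provider key."""
    return SUPPORTED_TYPES.get(provider, set())


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '2.10.0' into (2, 10, 0) so that 2.10 sorts after 2.9."""
    return tuple(int(part) for part in text.split("."))


def live_migration_allowed(forklift_version: str, kubevirt_version: str) -> bool:
    """Live migration needs Forklift 2.10 or later and KubeVirt 4.20 or later."""
    return (parse_version(forklift_version) >= (2, 10)
            and parse_version(kubevirt_version) >= (4, 20))


print(sorted(supported_types("ovirt")))          # ['cold', 'warm']
print(live_migration_allowed("2.10.0", "4.20"))  # True
print(live_migration_allowed("2.9.3", "4.20"))   # False
```

Versions are compared as integer tuples rather than strings, because a string comparison would incorrectly rank "2.9" above "2.10".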

Cold migration and warm migration in Forklift

In a cold migration, a powered-off virtual machine (VM) is migrated to a separate host. The VM is powered off, and no common shared storage is needed.

In a warm migration, a powered-on VM is migrated to a separate host. The state of the source host is cloned to the destination host.

Warm migration precopy stage

- Create an initial snapshot of the running VM disks.
- Copy the first snapshot to the target. This is a full-disk transfer, which copies the largest amount of data and takes the most time to complete.
- Copy deltas. Only the data that has changed since the last snapshot was taken is copied, so each delta takes less time to complete:
  - Create a new snapshot.
  - Copy the delta between the previous snapshot and the new snapshot.
  - Schedule the next snapshot. By default, it is scheduled one hour after the last snapshot finished, and this interval is configurable.
  - An arbitrary number of deltas can be copied.
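The precopy loop above can be sketched as a simple timeline. This is an illustrative Python model, not Forklift code: the function name is hypothetical, transfer durations are supplied by the caller, and it assumes "one hour after the last snapshot finished" means one hour after the previous copy ended.

```python
from datetime import datetime, timedelta


def precopy_schedule(start, transfer_minutes, interval=timedelta(hours=1)):
    """Return (snapshot_start, copy_end) for each precopy iteration.

    transfer_minutes[0] is the full-disk copy of the first snapshot; every
    later entry is a delta copy. Assumption: the next snapshot is taken one
    interval after the previous copy ended.
    """
    schedule = []
    snapshot_at = start
    for minutes in transfer_minutes:
        copy_end = snapshot_at + timedelta(minutes=minutes)
        schedule.append((snapshot_at, copy_end))
        snapshot_at = copy_end + interval  # wait one interval, then snapshot again
    return schedule


# A 90-minute full copy followed by two small deltas.
for taken, copied in precopy_schedule(datetime(2025, 1, 1, 0, 0), [90, 10, 5]):
    print(taken.strftime("%H:%M"), "->", copied.strftime("%H:%M"))
# 00:00 -> 01:30
# 02:30 -> 02:40
# 03:40 -> 03:45
```

The first iteration dominates the timeline. Later iterations are short as long as the VM changes little between snapshots.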

Preparing the cutover stage for a warm migration

- At the scheduled time, finalize the warm migration.
- Shut down the source VM.
- Copy the final snapshot delta to the target.
- Continue in the same way as a cold migration:
  - Guest conversion.
  - Start the target VM (optional).

Migration speed comparison

If you compare the migration speeds of cold and warm migrations, you can observe that:

- The observed speeds for single-disk transfer and disk conversion in warm migration are approximately the same as in cold migration.
- The benefit of warm migration is that the snapshot is transferred in the background while the VM is powered on.
- By default, a snapshot is taken every 60 minutes. If VMs change substantially, more data needs to be transferred than in a cold migration, where the VM is powered off.
- The cutover time, meaning the shutdown of the VM and the transfer of the last snapshot, depends on how much the VM has changed since the last snapshot.

Live migration reduces downtime even further than warm migration, but it is available only for migrations between KubeVirt clusters or between namespaces on the same KubeVirt cluster. Therefore, it is not included in the preceding comparison.
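The trade-off described in the comparison above can be made concrete with rough arithmetic. This Python sketch uses hypothetical helper names and made-up sizes. It counts only data volume and ignores guest conversion time, throughput variation, and VMware-side limits.

```python
def cold_transfer_gib(disk_sizes_gib):
    """Cold migration copies each block once: about the sum of all disks."""
    return sum(disk_sizes_gib)


def warm_transfer_gib(disk_sizes_gib, delta_sizes_gib):
    """Warm migration copies the first snapshot in full, then every delta."""
    return sum(disk_sizes_gib) + sum(delta_sizes_gib)


def cutover_copy_minutes(final_delta_gib, throughput_gib_per_min):
    """Copy time while the VM is off: only the final delta is transferred."""
    return final_delta_gib / throughput_gib_per_min


disks = [100, 50]   # GiB on each disk
deltas = [4, 3, 2]  # GiB changed between snapshots; the last one is copied at cutover
print(cold_transfer_gib(disks))               # 150
print(warm_transfer_gib(disks, deltas))       # 159
print(cutover_copy_minutes(deltas[-1], 1.0))  # 2.0
```

In this example, warm migration moves more data in total but keeps the VM powered on for all except the final 2 GiB. A cold migration keeps the VM off for the whole 150 GiB transfer.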

About cold and warm migration

Forklift supports cold and warm migration as follows:

Forklift supports cold migration from the following source providers:

- VMware vSphere
- oVirt
- OpenStack
- Open Virtual Appliances (OVAs) that were created by VMware vSphere
- Remote KubeVirt clusters

Forklift supports warm migration from the following source providers:

- VMware vSphere
- oVirt

Cold migration

Cold migration is the default migration type. The source virtual machines are shut down while the data is copied.

VMware only: In cold migrations, in situations in which a package manager cannot be used during the migration, Forklift does not install the To enable Forklift to automatically install If that is not possible, use your preferred automated or manual procedure to install

Warm migration

Most of the data is copied during the precopy stage while the source virtual machines (VMs) are running.

Then the VMs are shut down and the remaining data is copied during the cutover stage.

Precopy stage

The VMs are not shut down during the precopy stage.

The VM disks are copied incrementally by using changed block tracking (CBT) snapshots. The snapshots are created at one-hour intervals by default. You can change the snapshot interval by updating the forklift-controller deployment.

You must enable CBT for each source VM and each VM disk. A VM can support up to 28 CBT snapshots. If the source VM has too many CBT snapshots and the

The precopy stage runs until the cutover stage is started manually or is scheduled to start.

Cutover stage

The VMs are shut down during the cutover stage and the remaining data is migrated. Data stored in RAM is not migrated.

You can start the cutover stage manually by using the Forklift console, or you can schedule a cutover time in the Migration manifest.

Advantages and disadvantages of cold and warm migrations

The table that follows offers a more detailed description of the advantages and disadvantages of cold migration and warm migration. It assumes that you have installed Red Hat Enterprise Linux (RHEL) 9 on the OKD platform on which you installed Forklift:

| | Cold migration | Warm migration |
|---|---|---|
| Duration | Correlates to the amount of data on the disks. Each block is copied once. | Correlates to the amount of data on the disks and VM utilization. Blocks may be copied multiple times. |
| Fail fast | Convert and then transfer. Each VM is converted to be compatible with OKD and, if the conversion is successful, the VM is transferred. If a VM cannot be converted, the migration fails immediately. | Transfer and then convert. For each VM, Forklift creates a snapshot and transfers it to OKD. When you start the cutover, Forklift creates the last snapshot, transfers it, and then converts the VM. |
| Tools | | Containerized Data Importer (CDI), a persistent storage management add-on, and |
| Data transferred | Approximate sum of all disks | Approximate sum of all disks and VM utilization |
| VM downtime | High: The VMs are shut down, and the disks are transferred. | Low: Disks are transferred in the background. The VMs are shut down during the cutover stage, and the remaining data is migrated. Data stored in RAM is not migrated. |
| Parallelism | Disks are transferred sequentially for each VM. For remote migration to a destination that does not have Forklift installed, disks are transferred in parallel using CDI. | Disks are transferred in parallel by different pods. |
| Connection use | Keeps the connection to the Source only during the disk transfer. | Keeps the connection to the Source during the disk transfer, but the connection is released between snapshots. |
| Tools | Forklift only. | Forklift and CDI from KubeVirt. |

The preceding table describes the situation for VMs that are running because the main benefit of warm migration is the reduced downtime, and there is no reason to initiate warm migration for VMs that are down. However, performing warm migration for VMs that are down is not the same as cold migration, even when Forklift uses

When importing from VMware, additional factors affect the migration speed, such as limits related to ESXi, vSphere, or VDDK.

Conclusions

Based on the preceding information, we can draw the following conclusions about cold migration vs. warm migration:

- The shortest downtime of VMs can be achieved by using warm migration.
- The shortest duration for VMs with a large amount of data on a single disk can be achieved by using cold migration.
- The shortest duration for VMs with a large amount of data that is spread evenly across multiple disks can be achieved by using warm migration.
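As a planning aid, the conclusions above can be written down as a rule of thumb. This Python sketch is hypothetical and is not a Forklift feature. It treats `disk_count > 1` as a stand-in for "data spread evenly across multiple disks" and covers only the cold and warm choice, not live migration.

```python
WARM_CAPABLE = {"vsphere", "ovirt"}  # warm migration sources listed in this section


def suggest_migration_type(provider: str, priority: str, disk_count: int) -> str:
    """Hypothetical rule of thumb built from the conclusions above.

    priority is "downtime" (keep the VM available) or "duration" (finish sooner).
    Assumes the VM holds a large amount of data, spread evenly when disk_count > 1.
    """
    if priority not in ("downtime", "duration"):
        raise ValueError(f"unknown priority: {priority!r}")
    if provider not in WARM_CAPABLE:
        return "cold"  # warm migration is not offered for this provider
    if priority == "downtime":
        return "warm"  # shortest downtime
    return "cold" if disk_count == 1 else "warm"  # shortest duration


print(suggest_migration_type("vsphere", "duration", 1))  # cold
print(suggest_migration_type("ovirt", "duration", 4))    # warm
```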

Power status of virtual machines during cold migrations

Cold migration is the default migration type. During cold migrations, the source virtual machines are shut down while the data is copied.

VMware only: In cold migrations, in situations in which a package manager cannot be used during the migration, Forklift does not install the To enable Forklift to automatically install If that is not possible, use your preferred automated or manual procedure to install

Power status of virtual machines during warm migrations

During warm migrations, most of the data is copied during the precopy stage while the source virtual machines (VMs) are running.

Then the VMs are shut down, and the remaining data is copied during the cutover stage.

Precopy stage

The VMs are not shut down during the precopy stage.

The VM disks are copied incrementally by using changed block tracking (CBT) snapshots. The snapshots are created at one-hour intervals by default. You can change the snapshot interval by updating the forklift-controller deployment.

You must enable CBT for each source VM and each VM disk. A VM can support up to 28 CBT snapshots. If the source VM has too many CBT snapshots and the

The precopy stage runs until the cutover stage is started manually or is scheduled to start.

Cutover stage

The VMs are shut down during the cutover stage, and the remaining data is migrated. Data stored in RAM is not migrated.

You can start the cutover stage manually by using the Forklift console, or you can schedule a cutover time in the Migration manifest.

Advantages and disadvantages of cold and warm migrations

The table that follows offers a more detailed description of the advantages and disadvantages of cold migration and warm migration. It assumes that you have installed Red Hat Enterprise Linux (RHEL) 9 on the OKD platform on which you installed Forklift:

| | Cold migration | Warm migration |
|---|---|---|
| Duration | Correlates to the amount of data on the disks. Each block is copied once. | Correlates to the amount of data on the disks and VM utilization. Blocks may be copied multiple times. |
| Fail fast | Convert and then transfer. Each VM is converted to be compatible with OKD and, if the conversion is successful, the VM is transferred. If a VM cannot be converted, the migration fails immediately. | Transfer and then convert. For each VM, Forklift creates a snapshot and transfers it to OKD. When you start the cutover, Forklift creates the last snapshot, transfers it, and then converts the VM. |
| Tools | | Containerized Data Importer (CDI), a persistent storage management add-on, and |
| Data transferred | Approximate sum of all disks | Approximate sum of all disks and VM utilization |
| VM downtime | High: The VMs are shut down, and the disks are transferred. | Low: Disks are transferred in the background. The VMs are shut down during the cutover stage, and the remaining data is migrated. Data stored in RAM is not migrated. |
| Parallelism | Disks are transferred sequentially for each VM. For remote migration to a destination that does not have Forklift installed, disks are transferred in parallel using CDI. | Disks are transferred in parallel by different pods. |
| Connection use | Keeps the connection to the Source only during the disk transfer. | Keeps the connection to the Source during the disk transfer, but the connection is released between snapshots. |
| Tools | Forklift only. | Forklift and CDI from KubeVirt. |

The preceding table describes the situation for VMs that are running because the main benefit of warm migration is the reduced downtime, and there is no reason to initiate warm migration for VMs that are down. However, performing warm migration for VMs that are down is not the same as cold migration, even when Forklift uses

When importing from VMware, additional factors affect the migration speed, such as limits related to ESXi, vSphere, or VDDK.

Conclusions

Based on the preceding information, the following conclusions can be drawn about cold migration vs. warm migration:

- The shortest downtime of VMs can be achieved by using warm migration.
- The shortest duration for VMs with a large amount of data on a single disk can be achieved by using cold migration.
- The shortest duration for VMs with a large amount of data that is spread evenly across multiple disks can be achieved by using warm migration.

About live migration

You can use live migration to migrate virtual machines (VMs) between KubeVirt clusters, or between namespaces on the same KubeVirt cluster, with extremely limited downtime.

Live migration is supported by Forklift version 2.10.0 and later. It requires KubeVirt 4.20 or later on both your source and target clusters.

Live migration makes it easier for you to perform Day 2 tasks, such as seamless maintenance and workload balancing after you have migrated your VMs to KubeVirt.

Benefits of live migration

The major advantage of live migration is that it significantly reduces the amount of downtime needed to perform migrations. As a result, you can perform migrations with minimal service interruption. This allows your end users to continue using critical applications during migrations.

Live migration also gives you the following benefits:

- Additional migration functionality: Live migration supports migrating VMs between KubeVirt clusters and between namespaces on the same KubeVirt cluster, making Day 2 operations easier and safer to perform.
- Improved service continuity: Live migration lets you quickly migrate VMs from one cluster to another, eliminating the need for scheduled downtime during cluster maintenance or upgrades. This allows you to provide more consistent and reliable services.
- Greater operational flexibility: Live migration allows your IT team to manage your infrastructure dynamically without harming business operations. Your team can respond to changing demands or perform necessary maintenance without complex, disruptive procedures.
- Enhanced performance and scalability: Live migration gives you the ability to balance workloads across clusters. This helps ensure that applications have the resources they need, leading to better overall system performance and scalability.

Live migration, Forklift, and KubeVirt

Live migration is a joint operation between Forklift and KubeVirt that allows you to leverage the strengths of Forklift when you migrate virtual machines (VMs) from one KubeVirt cluster to another. Tasks and responsibilities are divided between the two as follows:

- Forklift handles the high-level orchestration that is needed to perform a live migration of KubeVirt VMs from one cluster to another.
- KubeVirt is responsible for the low-level migration mechanics, such as the actual state and storage transfer between the clusters.

Orchestration is done by the ForkliftController component of Forklift, rather than by KubeVirt, because ForkliftController is already designed to manage the migration pipeline, which includes the following responsibilities:

- Build an inventory of source resources and map them to the destination cluster.
- Create and run the migration plan.
- Ensure that all necessary shared resources, such as instance types, SSH keys, secrets, and config maps, are available and accessible on the destination cluster.

Limitations of live migration

Live migration lets you migrate virtual machines (VMs) between KubeVirt clusters or between namespaces on the same KubeVirt cluster with a minimum of downtime, but the feature does have the following limitations:

- Live migration is available only for migrations between KubeVirt clusters or between namespaces on the same KubeVirt cluster. It is not available for any other source provider, whether the provider is supported by Forklift or not.
- Live migration does not establish connectivity between KubeVirt clusters. Establishing such connectivity is the responsibility of the cluster administrator.
- Live migration does not migrate resources unrelated to VMs, such as services, routes, or other application components, that may be necessary for application availability after a migration.

Live migration workflow

Live migration uses a different workflow than other types of migration. You can use the following workflow to understand how Forklift orchestrates a live migration with KubeVirt handling the low-level mechanics of the migration. This workflow is also designed to help you troubleshoot problems that might occur during a live migration.

- Start: When you click Start plan, Forklift initiates the migration plan.
- PreHook: If you added a pre-migration hook, Forklift runs it now.
- Create empty DataVolumes: Forklift creates empty target DataVolumes in the target KubeVirt cluster. KubeVirt handles the actual storage migration.
- Ensure resources: Forklift copies all secrets or config maps that are mounted by a source VM to the target namespace.
- Create target VMs: Forklift creates target VMs in a running state and creates a VirtualMachineInstanceMigration resource on each cluster. The VMs have a special KubeVirt annotation that indicates that they must start in migration target mode.
- Wait for state transfer: Forklift waits for KubeVirt to handle the state transfer and for the destination VMs to report as ready. KubeVirt also shuts down the source VMs after the state transfer.
- PostHook: If you added a post-migration hook, Forklift runs it now.
- Completed: Forklift indicates that the migration is finished.
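When troubleshooting, it can help to know which phases a given plan is expected to pass through. This Python sketch models the workflow above as an ordered enum. The phase labels come from the list, not from Forklift's internal step identifiers, and skipping hook phases when no hook is configured is a simplification.

```python
from enum import Enum


class Phase(Enum):
    """Live migration phases, in the order described above."""
    START = "Start"
    PRE_HOOK = "PreHook"
    CREATE_DATA_VOLUMES = "Create empty DataVolumes"
    ENSURE_RESOURCES = "Ensure resources"
    CREATE_TARGET_VMS = "Create target VMs"
    WAIT_FOR_STATE_TRANSFER = "Wait for state transfer"  # KubeVirt does the transfer
    POST_HOOK = "PostHook"
    COMPLETED = "Completed"


def planned_phases(has_pre_hook: bool, has_post_hook: bool) -> list[Phase]:
    """Phases in workflow order; hook phases are kept only if a hook exists."""
    phases = []
    for phase in Phase:  # Enum iteration follows definition order
        if phase is Phase.PRE_HOOK and not has_pre_hook:
            continue
        if phase is Phase.POST_HOOK and not has_post_hook:
            continue
        phases.append(phase)
    return phases


for phase in planned_phases(has_pre_hook=False, has_post_hook=True):
    print(phase.value)
```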

Prerequisites and software requirements for all providers

Review the following prerequisites and software requirements to ensure that your environment is prepared for migration.

You must install compatible versions of OKD and KubeVirt.

Storage support and default modes

Forklift uses the following default volume and access modes for supported storage.

| Provisioner | Volume mode | Access mode |
|---|---|---|
| kubernetes.io/aws-ebs | Block | ReadWriteOnce |
| kubernetes.io/azure-disk | Block | ReadWriteOnce |
| kubernetes.io/azure-file | Filesystem | ReadWriteMany |
| kubernetes.io/cinder | Block | ReadWriteOnce |
| kubernetes.io/gce-pd | Block | ReadWriteOnce |
| kubernetes.io/hostpath-provisioner | Filesystem | ReadWriteOnce |
| manila.csi.openstack.org | Filesystem | ReadWriteMany |
| openshift-storage.cephfs.csi.ceph.com | Filesystem | ReadWriteMany |
| openshift-storage.rbd.csi.ceph.com | Block | ReadWriteOnce |
| kubernetes.io/rbd | Block | ReadWriteOnce |
| kubernetes.io/vsphere-volume | Block | ReadWriteOnce |
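If you script storage map checks, you can keep the table above as data. This Python sketch is a hypothetical lookup copied from the table. It does not reflect how Forklift itself handles provisioners that are not listed, so unknown provisioners raise an error here.

```python
# provisioner -> (volume mode, access mode), copied from the table above
DEFAULT_MODES: dict[str, tuple[str, str]] = {
    "kubernetes.io/aws-ebs": ("Block", "ReadWriteOnce"),
    "kubernetes.io/azure-disk": ("Block", "ReadWriteOnce"),
    "kubernetes.io/azure-file": ("Filesystem", "ReadWriteMany"),
    "kubernetes.io/cinder": ("Block", "ReadWriteOnce"),
    "kubernetes.io/gce-pd": ("Block", "ReadWriteOnce"),
    "kubernetes.io/hostpath-provisioner": ("Filesystem", "ReadWriteOnce"),
    "manila.csi.openstack.org": ("Filesystem", "ReadWriteMany"),
    "openshift-storage.cephfs.csi.ceph.com": ("Filesystem", "ReadWriteMany"),
    "openshift-storage.rbd.csi.ceph.com": ("Block", "ReadWriteOnce"),
    "kubernetes.io/rbd": ("Block", "ReadWriteOnce"),
    "kubernetes.io/vsphere-volume": ("Block", "ReadWriteOnce"),
}


def default_modes(provisioner: str) -> tuple[str, str]:
    """Return (volume mode, access mode) for a provisioner listed in the table."""
    try:
        return DEFAULT_MODES[provisioner]
    except KeyError:
        raise ValueError(f"{provisioner!r} is not in the table") from None


print(default_modes("openshift-storage.cephfs.csi.ceph.com"))  # ('Filesystem', 'ReadWriteMany')
```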

If the KubeVirt storage does not support dynamic provisioning, you must apply the following settings:

See Enabling a statically-provisioned storage class for details on editing the storage profile.

If your migration uses block storage and persistent volumes created with an EXT4 file system, increase the file system overhead in the Containerized Data Importer (CDI) to more than 10%. The default overhead that CDI assumes does not fully account for the space reserved for the root partition. If you do not increase the file system overhead in CDI accordingly, your migration might fail.

When you migrate from OpenStack, or when you run a cold migration from oVirt to the OKD cluster that Forklift is deployed on, the migration allocates persistent volumes without CDI. In these cases, you might need to adjust the file system overhead. If the configured file system overhead, which has a default value of 10%, is too low, the disk transfer fails due to lack of space. In that case, increase the file system overhead. In some cases, however, you might want to decrease the file system overhead to reduce storage consumption. You can change the file system overhead by changing the value of the
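To see why a too-low overhead causes a transfer to run out of space, treat the overhead as the fraction of each persistent volume that the disk image cannot use. Under that assumption, this Python sketch computes the smallest volume that still fits a disk. The helper name is hypothetical, and CDI's own sizing may add alignment or rounding that is not shown here.

```python
import math
from fractions import Fraction


def required_pvc_bytes(disk_bytes: int, overhead: str = "0.1") -> int:
    """Smallest volume whose usable part, size * (1 - overhead), holds the disk.

    overhead is a decimal string such as "0.1" (10%); Fraction keeps the
    arithmetic exact so the result is not skewed by float rounding.
    """
    usable_fraction = 1 - Fraction(overhead)
    if usable_fraction <= 0:
        raise ValueError("overhead must be below 1")
    return math.ceil(Fraction(disk_bytes) / usable_fraction)


GIB = 1024 ** 3
print(required_pvc_bytes(10 * GIB))          # 11930464712, about 11.1 GiB
print(required_pvc_bytes(10 * GIB, "0.15"))  # a larger volume for a higher overhead
```

Raising the overhead increases the volume size requested for the same disk, which is the trade-off between transfer failures and storage consumption described in the notes above.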

Network prerequisites

The following network prerequisites apply to all migrations:

- Do not change IP addresses, VLANs, and other network configuration settings during a migration. The MAC addresses of the virtual machines (VMs) are preserved during migration.
- The network connections between the source environment, the KubeVirt cluster, and the replication repository must be reliable and uninterrupted.
- If you are mapping more than one source and destination network, you must create a network attachment definition for each additional destination network.

Ports

The firewalls must enable traffic over the following ports:

VMware vSphere:

| Port | Protocol | Source | Destination | Purpose |
|---|---|---|---|---|
| 443 | TCP | OKD nodes | VMware vCenter | VMware provider inventory; disk transfer authentication |
| 443 | TCP | OKD nodes | VMware ESXi hosts | Disk transfer authentication |
| 902 | TCP | OKD nodes | VMware ESXi hosts | Disk transfer data copy |

oVirt:

| Port | Protocol | Source | Destination | Purpose |
|---|---|---|---|---|
| 443 | TCP | OKD nodes | oVirt Engine | oVirt provider inventory; disk transfer authentication |
| 54322 | TCP | OKD nodes | oVirt hosts | Disk transfer data copy |

OpenStack:

| Port | Protocol | Source | Destination | Purpose |
|---|---|---|---|---|
| 8776 | Cinder | OKD nodes | OpenStack hosts | Block storage API |
| 8774 | Nova | OKD nodes | OpenStack hosts | Virtualization API |
| 5000 | Keystone | OKD nodes | OpenStack hosts | Authentication API |
| 9696 | Neutron | OKD nodes | OpenStack hosts | Network API |
| 9292 | Glance | OKD nodes | OpenStack hosts | Image service API |

OVA:

| Port | Protocol | Source | Destination | Purpose |
|---|---|---|---|---|
| 2049 | TCP | OKD nodes | Server containing the OVA files | NFS service |
| 111 | TCP or UDP | OKD nodes | Server containing the OVA files | RPC Portmapper, only needed for NFSv4.0 |

KubeVirt:

| Port | Protocol | Source | Destination | Purpose |
|---|---|---|---|---|
| 6443 | API | OKD nodes | KubeVirt host | Access API to get information from a VM’s manifest |
| 443 | TCP | OKD nodes | KubeVirt host | Download VM data using the |
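Before you start a migration, you can confirm that the firewall rules in the tables above are in place by probing the TCP ports from an OKD node. This Python sketch uses only the standard `socket` module. The role names in `REQUIRED_TCP_PORTS` are hypothetical labels for the destinations in the tables. A successful connect shows only that something is listening, and the UDP option for port 111 is not tested.

```python
import socket

# Destination role -> TCP ports, taken from the tables above (role names are hypothetical).
REQUIRED_TCP_PORTS: dict[str, dict[str, list[int]]] = {
    "vsphere": {"vcenter": [443], "esxi": [443, 902]},
    "ovirt": {"engine": [443], "host": [54322]},
    "openstack": {"host": [5000, 8774, 8776, 9292, 9696]},
    "ova": {"nfs-server": [111, 2049]},
    "kubevirt": {"host": [443, 6443]},
}


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def check_provider(provider: str, hosts: dict[str, str]) -> dict[tuple[str, int], bool]:
    """Probe every required port; hosts maps each role to a hostname or IP."""
    results = {}
    for role, ports in REQUIRED_TCP_PORTS[provider].items():
        for port in ports:
            results[(hosts[role], port)] = tcp_port_open(hosts[role], port)
    return results


# Example call (hostnames are placeholders):
# check_provider("vsphere", {"vcenter": "vcenter.example.com", "esxi": "esxi01.example.com"})
```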

Source virtual machine prerequisites

The following prerequisites for source virtual machines (VMs) apply to all migrations:

- ISO images and CD-ROMs are unmounted.
- Each NIC contains either an IPv4 address or an IPv6 address, although a NIC may use both.
- The operating system of each VM is certified and supported as a guest operating system for conversions.

  You can check that the operating system is supported by referring to the table in Converting virtual machines from other hypervisors to KVM with virt-v2v. See the columns of the table that refer to RHEL 8 hosts and RHEL 9 hosts.
- VMs that you want to migrate with MTV 2.6.z run on RHEL 8.
- VMs that you want to migrate with MTV 2.7.z run on RHEL 9.
- The name of a VM must not contain a period (.). Forklift changes any period in a VM name to a dash (-).
- The name of a VM must not be the same as any other VM in the KubeVirt environment.

Forklift has limited support for the migration of dual-boot operating system VMs.

In the case of a dual-boot operating system VM, Forklift tries to convert the first boot disk it finds. Alternatively, you can specify the root device in the Forklift UI.

For virtual machines (VMs) running Microsoft Windows, Volume Shadow Copy Service (VSS) inside the guest VM is used to quiesce the file system and applications.

When performing a warm migration of a Microsoft Windows virtual machine from VMware, you must start VSS on the Windows guest operating system for the snapshot and Quiesce guest file system to succeed. If you do not start VSS on the Windows guest operating system, snapshot creation during the warm migration fails with the following error:

An error occurred while taking a snapshot: Failed to restart the virtual machine

If you set the VSS service to Manual and start a snapshot creation with Quiesce guest file system = yes, the VMware Snapshot provider service requests VSS to start the shadow copy in the background.

Forklift automatically assigns a new name to a VM that does not comply with the rules.

Forklift makes the following changes when it automatically generates a new VM name:

- Excluded characters are removed.
- Uppercase letters are switched to lowercase letters.
- Any underscore (_) is changed to a dash (-).

This feature allows a migration to proceed smoothly even if someone enters a VM name that does not follow the rules.
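The renaming rules above can be approximated to preview target names and catch collisions before you run a plan. This Python sketch is not Forklift's implementation. It assumes that "excluded characters" means anything other than lowercase letters, digits, and dashes, and it strips leading and trailing dashes, which the list above does not state. Because target names must be unique, it also flags source names that would normalize to the same result.

```python
import re


def normalize_vm_name(name: str) -> str:
    """Approximate the renaming rules above (a sketch, not Forklift's code)."""
    new = name.lower()                             # uppercase -> lowercase
    new = new.replace(".", "-").replace("_", "-")  # periods and underscores -> dashes
    new = re.sub(r"[^a-z0-9-]", "", new)           # assumption: other characters are excluded
    return new.strip("-")                          # assumption: no leading or trailing dash


def find_collisions(names):
    """Group source names that normalize to the same target name."""
    groups = {}
    for original in names:
        groups.setdefault(normalize_vm_name(original), []).append(original)
    return {target: sources for target, sources in groups.items() if len(sources) > 1}


print(normalize_vm_name("My_VM.Prod#1"))            # my-vm-prod1
print(find_collisions(["web.01", "Web_01", "db"]))  # {'web-01': ['web.01', 'Web_01']}
```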

Microsoft Windows VMs that use the Measured Boot feature cannot be migrated. Measured Boot is a mechanism that prevents any kind of device change by checking each start-up component, from the firmware all the way to the boot driver.

The alternative to migration is to re-create the Windows VM directly on KubeVirt.

Virtual machines (VMs) with Secure Boot enabled currently might not be migrated automatically, because Secure Boot would prevent the VMs from booting on the destination provider. Secure Boot is a security standard developed by members of the PC industry to ensure that a device boots using only software that is trusted by the Original Equipment Manufacturer (OEM).

Workaround: The current workaround is to disable Secure Boot on the destination. For more details, see Disabling Secure Boot. (MTV-1548)

Forklift encryption support

Forklift supports the following types of encryption for virtual machines (VMs):

- For VMs that run on Linux: Linux Unified Key Setup (LUKS)
- For VMs that run on Windows: BitLocker

Specific software requirements for each provider

In addition to the prerequisites and software requirements that apply to all providers, there are specific requirements for each provider.

VMware prerequisites

It is strongly recommended to create a VDDK image to accelerate migrations. For more information, see Creating a VDDK image.

Virtual machine (VM) migrations do not work without VDDK when a VM is backed by VMware vSAN.

The following prerequisites apply to VMware migrations:

- You must use a compatible version of VMware vSphere.
- You must be logged in as a user with at least the minimal set of VMware privileges.
- To access the virtual machine using a pre-migration hook, VMware Tools must be installed on the source virtual machine.
- The VM operating system must be certified and supported for use as a guest operating system with KubeVirt and for conversion to KVM with virt-v2v.
- If you are running a warm migration, you must enable changed block tracking (CBT) on the VMs and on the VM disks.
- If you are migrating more than 10 VMs from an ESXi host in the same migration plan, you must increase the Network File Copy (NFC) service memory of the host.
- It is strongly recommended to disable hibernation because Forklift does not support migrating hibernated VMs.
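Two of the prerequisites above can be checked mechanically from a plan inventory: the NFC memory threshold per ESXi host and CBT for warm migrations. In this Python sketch, the `PlannedVM` record and both helpers are hypothetical. You would fill the records from your own inventory export, because the sketch does not call any VMware or Forklift API.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class PlannedVM:
    """Hypothetical record for one VM in a migration plan."""
    name: str
    esxi_host: str
    cbt_enabled: bool  # CBT enabled on the VM and on all of its disks


def nfc_hosts_to_tune(vms, limit=10):
    """ESXi hosts that contribute more than `limit` VMs to the same plan."""
    counts = Counter(vm.esxi_host for vm in vms)
    return sorted(host for host, n in counts.items() if n > limit)


def vms_missing_cbt(vms):
    """VMs that are not ready for a warm migration because CBT is off."""
    return [vm.name for vm in vms if not vm.cbt_enabled]


plan = [PlannedVM(f"vm{i}", "esxi-a", True) for i in range(11)]
plan.append(PlannedVM("db", "esxi-b", False))
print(nfc_hosts_to_tune(plan))  # ['esxi-a']
print(vms_missing_cbt(plan))    # ['db']
```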

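For virtual machines (VMs) running Microsoft Windows, Volume Shadow Copy Service (VSS) inside the guest VM is used to quiesce the file system and applications. When performing a warm migration of a Microsoft Windows virtual machine from VMware, you must start VSS on the Windows guest operating system for the snapshot and Quiesce guest file system to succeed. If you do not start VSS on the Windows guest operating system, snapshot creation during the warm migration fails with the following error: An error occurred while taking a snapshot: Failed to restart the virtual machine. If you set the VSS service to Manual and start a snapshot creation with Quiesce guest file system = yes, the VMware Snapshot provider service requests VSS to start the shadow copy in the background.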

In case of a power outage, data might be lost for a VM with disabled hibernation. However, if hibernation is not disabled, the migration fails.

Neither Forklift nor OpenShift Virtualization supports conversion of Btrfs for migrating VMs from VMware.

VMware privileges

The following minimal set of VMware privileges is required to migrate virtual machines to KubeVirt with Forklift:

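| Privilege | Description |
|---|---|
| Virtual machine.Interaction privileges: | |
| Virtual machine.Interaction.Power Off | Allows powering off a powered-on virtual machine. This operation powers down the guest operating system. |
| Virtual machine.Interaction.Power On | Allows powering on a powered-off virtual machine and resuming a suspended virtual machine. |
| Virtual machine.Guest operating system management by VIX API | Allows managing a virtual machine by the VMware Virtual Infrastructure eXtension (VIX) API. |
| Virtual machine.Provisioning privileges: | |
| Virtual machine.Provisioning.Allow disk access | Allows opening a disk on a virtual machine for random read and write access. Used mostly for remote disk mounting. |
| Virtual machine.Provisioning.Allow file access | Allows operations on files associated with a virtual machine, including VMX, disks, logs, and NVRAM. |
| Virtual machine.Provisioning.Allow read-only disk access | Allows opening a disk on a virtual machine for random read access. Used mostly for remote disk mounting. |
| Virtual machine.Provisioning.Allow virtual machine download | Allows read operations on files associated with a virtual machine, including VMX, disks, logs, and NVRAM. |
| Virtual machine.Provisioning.Allow virtual machine files upload | Allows write operations on files associated with a virtual machine, including VMX, disks, logs, and NVRAM. |
| Virtual machine.Provisioning.Clone template | Allows cloning of a template. |
| Virtual machine.Provisioning.Clone virtual machine | Allows cloning of an existing virtual machine and allocation of resources. |
| Virtual machine.Provisioning.Create template from virtual machine | Allows creation of a new template from a virtual machine. |
| Virtual machine.Provisioning.Customize guest | Allows customization of a virtual machine’s guest operating system without moving the virtual machine. |
| Virtual machine.Provisioning.Deploy template | Allows deployment of a virtual machine from a template. |
| Virtual machine.Provisioning.Mark as template | Allows marking an existing powered-off virtual machine as a template. |
| Virtual machine.Provisioning.Mark as virtual machine | Allows marking an existing template as a virtual machine. |
| Virtual machine.Provisioning.Modify customization specification | Allows creation, modification, or deletion of customization specifications. |
| Virtual machine.Provisioning.Promote disks | Allows promote operations on a virtual machine’s disks. |
| Virtual machine.Provisioning.Read customization specifications | Allows reading a customization specification. |
| Virtual machine.Snapshot management privileges: | |
| Virtual machine.Snapshot management.Create snapshot | Allows creation of a snapshot from the virtual machine’s current state. |
| Virtual machine.Snapshot management.Remove Snapshot | Allows removal of a snapshot from the snapshot history. |
| Datastore privileges: | |
| Datastore.Browse datastore | Allows exploring the contents of a datastore. |
| Datastore.Low level file operations | Allows performing low-level file operations (read, write, delete, and rename) in a datastore. |
| Sessions privileges: | |
| Sessions.Validate session | Allows verification of the validity of a session. |
| Cryptographic privileges: | |
| Cryptographic.Decrypt | Allows decryption of an encrypted virtual machine. |
| Cryptographic.Direct access | Allows access to encrypted resources. |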

Create a role in VMware with the permissions described in the preceding table, and then apply this role to the Inventory section, as described in Creating a VMware role to grant MTV privileges.

Creating a VMware role to grant MTV privileges

You can create a role in VMware to grant privileges for Forklift and then grant those privileges to users with that role.

The procedure that follows explains how to do this in general. For detailed instructions, see VMware documentation.

-

In the vCenter Server UI, create a role that includes the set of privileges described in the table in VMware privileges.

-

In the vSphere inventory UI, grant privileges for users with this role to the appropriate vSphere logical objects at one of the following levels:

-

At the user or group level: Assign privileges to the appropriate logical objects in the data center and use the Propagate to child objects option.

-

At the object level: Apply the same role individually to all the relevant vSphere logical objects involved in the migration, for example, hosts, vSphere clusters, data centers, or networks.


Creating a VDDK image

It is strongly recommended that you use Forklift with the VMware Virtual Disk Development Kit (VDDK) when transferring virtual disks from VMware vSphere.

Creating a VDDK image, although optional, is highly recommended. Using Forklift without VDDK is not recommended and could result in significantly lower migration speeds.

To make use of this feature, you download the VDDK, build a VDDK image, and push the VDDK image to your image registry.

The VDDK package contains symbolic links. Therefore, you must create the VDDK image on a file system that preserves symbolic links (symlinks).

Storing the VDDK image in a public registry might violate the VMware license terms.

-

`podman` installed.

-

You are working on a file system that preserves symbolic links (symlinks).

-

If you are using an external registry, KubeVirt must be able to access it.

-

Create and navigate to a temporary directory:

```
$ mkdir /tmp/<dir_name> && cd /tmp/<dir_name>
```

-

In a browser, navigate to the VMware VDDK version 8 download page.

-

Select version 8.0.1 and click Download.

To migrate to KubeVirt 4.12, download VDDK version 7.0.3.2 from the VMware VDDK version 7 download page.

-

Save the VDDK archive file in the temporary directory.

-

Extract the VDDK archive:

```
$ tar -xzf VMware-vix-disklib-<version>.x86_64.tar.gz
```

-

Create a Dockerfile:

```
$ cat > Dockerfile <<EOF
FROM registry.access.redhat.com/ubi8/ubi-minimal
USER 1001
COPY vmware-vix-disklib-distrib /vmware-vix-disklib-distrib
RUN mkdir -p /opt
ENTRYPOINT ["cp", "-r", "/vmware-vix-disklib-distrib", "/opt"]
EOF
```

-

Build the VDDK image:

```
$ podman build . -t <registry_route_or_server_path>/vddk:<tag>
```

-

Push the VDDK image to the registry:

```
$ podman push <registry_route_or_server_path>/vddk:<tag>
```

-

Ensure that the image is accessible to your KubeVirt environment.
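Because the image is only useful if the symbolic links in the VDDK package survived extraction, you might want a quick check before running `podman build`. The following Python sketch walks the extracted directory and counts symlinks without following them. The function names are hypothetical, the default directory name `vmware-vix-disklib-distrib` is taken from the Dockerfile above, and the sketch assumes only what is stated above: that the package contains at least one symlink.

```python
import os
from pathlib import Path


def count_symlinks(root: str) -> int:
    """Count symbolic links under root without following them."""
    total = 0
    # os.walk does not descend into linked directories by default, but it still
    # lists them in dirnames, so check both lists for links.
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            if os.path.islink(os.path.join(dirpath, name)):
                total += 1
    return total


def check_vddk_tree(root: str = "vmware-vix-disklib-distrib") -> int:
    """Return the symlink count; raise if the tree is missing or has no symlinks."""
    if not Path(root).is_dir():
        raise FileNotFoundError(f"{root} not found; extract the VDDK archive first")
    links = count_symlinks(root)
    if links == 0:
        raise RuntimeError(
            f"no symbolic links under {root}; this file system may not preserve symlinks"
        )
    return links
```

Run `check_vddk_tree()` from the temporary directory after extracting the archive. A `RuntimeError` suggests repeating the extraction on a different file system.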

Increasing the NFC service memory of an ESXi host

If you are migrating more than 10 VMs from an ESXi host in the same migration plan, you must increase the Network File Copy (NFC) service memory of the host. Otherwise, the migration fails because the NFC service memory is limited to 10 parallel connections.

-

Log in to the ESXi host as root.

-

Change the value of `maxMemory` to `1000000000` in `/etc/vmware/hostd/config.xml`:

```
...
<nfcsvc>
   <path>libnfcsvc.so</path>
   <enabled>true</enabled>
   <maxMemory>1000000000</maxMemory>
   <maxStreamMemory>10485760</maxStreamMemory>
</nfcsvc>
...
```

-

Restart `hostd`:

```
# /etc/init.d/hostd restart
```

You do not need to reboot the host.
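If you manage several ESXi hosts, a read-only check can confirm the change. The following Python sketch parses the contents of `config.xml` and reports whether `maxMemory` under `nfcsvc` is at least `1000000000`. It deliberately does not rewrite the file. The function names are hypothetical, and the sketch assumes that you have a copy of the file, or a Python interpreter, wherever you run it.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Value that the procedure above sets for <maxMemory>.
REQUIRED_MAX_MEMORY = 1_000_000_000


def nfc_max_memory(config_xml: str) -> Optional[int]:
    """Return the <maxMemory> value inside <nfcsvc>, or None if it is absent."""
    root = ET.fromstring(config_xml)
    # <nfcsvc> can be nested anywhere in hostd's config.xml, so search the whole tree.
    for nfcsvc in root.iter("nfcsvc"):
        node = nfcsvc.find("maxMemory")
        if node is not None and node.text and node.text.strip():
            return int(node.text.strip())
    return None


def nfc_memory_ok(config_xml: str) -> bool:
    """True if the NFC service memory is at least the recommended value."""
    value = nfc_max_memory(config_xml)
    return value is not None and value >= REQUIRED_MAX_MEMORY
```

For example, `nfc_memory_ok(open("config.xml").read())` returns `False` until the new value is in place.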

VDDK validator containers need requests and limits

If you have cluster or project resource quotas set, you must ensure that you have a sufficient quota for the Forklift pods to perform the migration.

These settings are highly dependent on your environment. If many migrations run at once and the quotas are not large enough for them, the migrations can fail. This is also related to the MAX_VM_INFLIGHT setting, which determines how many VMs or disks are migrated at once.

If necessary, you can override the following defaults in the ForkliftController custom resource (CR):

-

Defaults that affect both cold and warm migrations:

Cold migrations are likely to be more resource-intensive because they perform the disk copy. For warm migrations, you might be able to reduce the requests.

-

`virt_v2v_container_limits_cpu`: `4000m`

-

`virt_v2v_container_limits_memory`: `8Gi`

-

`virt_v2v_container_requests_cpu`: `1000m`

-

`virt_v2v_container_requests_memory`: `1Gi`

Cold and warm migrations that use `virt-v2v` can be resource-intensive. For more details, see Compute power and RAM.

-

Defaults that affect any migrations with hooks:

-

`hooks_container_limits_cpu`: `1000m`

-

`hooks_container_limits_memory`: `1Gi`

-

`hooks_container_requests_cpu`: `100m`

-

`hooks_container_requests_memory`: `150Mi`

-

Defaults that affect any OVA migrations:

-

`ova_container_limits_cpu`: `1000m`

-

`ova_container_limits_memory`: `1Gi`

-

`ova_container_requests_cpu`: `100m`

-

`ova_container_requests_memory`: `150Mi`
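To judge whether a quota is large enough, you can multiply the per-container defaults by the number of containers that you expect to run at the same time. The following Python sketch does that arithmetic for requests only. It assumes, for illustration, one container per in-flight migration, and it handles only the quantity suffixes used in the defaults above (`m`, `Ki`, `Mi`, `Gi`). The function names are hypothetical.

```python
from decimal import Decimal
from typing import Tuple

# Multipliers for the Kubernetes quantity suffixes used in the defaults above.
_SUFFIXES = {
    "Ki": Decimal(1024),
    "Mi": Decimal(1024) ** 2,
    "Gi": Decimal(1024) ** 3,
    "m": Decimal("0.001"),
}


def parse_quantity(q: str) -> Decimal:
    """Parse '1000m', '150Mi', '8Gi', or a plain number (a small subset of the format)."""
    for suffix, factor in _SUFFIXES.items():
        if q.endswith(suffix):
            return Decimal(q[: -len(suffix)]) * factor
    return Decimal(q)


def total_requests(pods: int, cpu: str, memory: str) -> Tuple[Decimal, Decimal]:
    """Total CPU (cores) and memory (GiB) requested by `pods` identical containers."""
    cores = parse_quantity(cpu) * pods
    gib = parse_quantity(memory) * pods / _SUFFIXES["Gi"]
    return cores, gib


def fits_quota(pods: int, cpu: str, memory: str, quota_cpu: str, quota_memory: str) -> bool:
    """True if the summed requests stay within requests.cpu and requests.memory quotas."""
    return (
        parse_quantity(cpu) * pods <= parse_quantity(quota_cpu)
        and parse_quantity(memory) * pods <= parse_quantity(quota_memory)
    )
```

For example, `total_requests(20, "1000m", "1Gi")` gives 20 cores and 20 GiB for 20 concurrent `virt-v2v` containers at the default requests, which exceeds a 16-core CPU quota.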

oVirt prerequisites

The following prerequisites apply to oVirt migrations:

-

To create a source provider, you must have at least the `UserRole` and `ReadOnlyAdmin` roles assigned to you. These are the minimum required permissions; however, any other administrator or superuser permissions also work.

You must keep the …

-

To migrate virtual machines:

-

You must have one of the following:

-

oVirt admin permissions. These permissions allow you to migrate any virtual machine in the system.

-

`DiskCreator` and `UserVmManager` permissions on every virtual machine you want to migrate.

-

You must use a compatible version of oVirt.

-

You must have the Engine CA certificate, unless it was replaced by a third-party certificate, in which case, specify the Engine Apache CA certificate.

You can obtain the Engine CA certificate by navigating to https://<engine_host>/ovirt-engine/services/pki-resource?resource=ca-certificate&format=X509-PEM-CA in a browser.

-

If you are migrating a virtual machine with a direct logical unit number (LUN) disk, ensure that the nodes in the KubeVirt destination cluster that the VM is expected to run on can access the backend storage.
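If you script provider creation, you can build the Engine CA download URL from the Engine host name and sanity-check the downloaded file before you use it. The following Python sketch does both. The function names and the host name in the usage note are hypothetical, and the PEM check only confirms that the file starts and ends like a PEM certificate; it does not validate the certificate.

```python
from urllib.parse import urlencode, urlunsplit

PEM_BEGIN = "-----BEGIN CERTIFICATE-----"
PEM_END = "-----END CERTIFICATE-----"


def engine_ca_url(engine_host: str) -> str:
    """Build the Engine CA download URL given in the prerequisites."""
    query = urlencode({"resource": "ca-certificate", "format": "X509-PEM-CA"})
    return urlunsplit(("https", engine_host, "/ovirt-engine/services/pki-resource", query, ""))


def looks_like_pem_cert(text: str) -> bool:
    """Cheap check that a saved file looks like PEM, not an HTML error page."""
    body = text.strip()
    return body.startswith(PEM_BEGIN) and body.endswith(PEM_END)
```

For example, `engine_ca_url("engine.example.com")` returns the URL from the prerequisites with that host name filled in.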


OpenStack prerequisites

The following prerequisites apply to OpenStack migrations:

-

You must use a compatible version of OpenStack.

Additional authentication methods for migrations with OpenStack source providers

Forklift supports the following authentication methods for migrations with OpenStack source providers in addition to the standard username and password credential set:

-

Token authentication

-

Application credential authentication

You can use these methods to migrate virtual machines with OpenStack source providers using the command-line interface (CLI) the same way you migrate other virtual machines, except for how you prepare the Secret manifest.

Using token authentication with an OpenStack source provider

You can use token authentication, instead of username and password authentication, when you create an OpenStack source provider.

Forklift supports both of the following types of token authentication:

-

Token with user ID

-

Token with user name

For each type of token authentication, you need to use data from OpenStack to create a Secret manifest.

You have an OpenStack account.

-

In the dashboard of the OpenStack web console, click Project > API Access.

-

Expand Download OpenStack RC file and click OpenStack RC file.

The file that is downloaded, referred to here as `<openstack_rc_file>`, includes the following fields used for token authentication:

```
OS_AUTH_URL
OS_PROJECT_ID
OS_PROJECT_NAME
OS_DOMAIN_NAME
OS_USERNAME
```

-

To get the data needed for token authentication, run the following command:

```
$ openstack token issue
```

The output, referred to here as `<openstack_token_output>`, includes the `token`, `userID`, and `projectID` that you need for authentication using a token with user ID.

-

Create a `Secret` manifest similar to the following:

-

For authentication using a token with user ID:

```
cat << EOF | oc apply -f -
apiVersion: v1
kind: Secret
metadata:
  name: openstack-secret-tokenid
  namespace: openshift-mtv
  labels:
    createdForProviderType: openstack
type: Opaque
stringData:
  authType: token
  token: <token_from_openstack_token_output>
  projectID: <projectID_from_openstack_token_output>
  userID: <userID_from_openstack_token_output>
  url: <OS_AUTH_URL_from_openstack_rc_file>
EOF
```

-

For authentication using a token with user name:

```
cat << EOF | oc apply -f -
apiVersion: v1
kind: Secret
metadata:
  name: openstack-secret-tokenname
  namespace: openshift-mtv
  labels:
    createdForProviderType: openstack
type: Opaque
stringData:
  authType: token
  token: <token_from_openstack_token_output>
  domainName: <OS_DOMAIN_NAME_from_openstack_rc_file>
  projectName: <OS_PROJECT_NAME_from_openstack_rc_file>
  username: <OS_USERNAME_from_openstack_rc_file>
  url: <OS_AUTH_URL_from_openstack_rc_file>
EOF
```
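Instead of copying values by hand, you can generate the token `Secret` from the RC file and the token output. The following Python sketch is a minimal example, assuming that the RC file uses `export OS_<NAME>=<value>` lines and contains the fields listed above. The function names are hypothetical. It renders JSON, which `oc apply -f -` also accepts.

```python
import json
import shlex
from typing import Dict


def parse_openstack_rc(text: str) -> Dict[str, str]:
    """Collect `export OS_...=value` assignments from an OpenStack RC file."""
    values: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("export OS_"):
            continue  # skip comments, prompts, unset lines, and so on
        key, _, raw = line[len("export "):].partition("=")
        parts = shlex.split(raw)  # removes the shell quoting around values
        values[key] = parts[0] if parts else ""
    return values


def token_secret(name: str, namespace: str, rc: Dict[str, str], token: str,
                 user_id: str = "", project_id: str = "") -> dict:
    """Build the token Secret; pass user_id and project_id for the user-ID variant."""
    data = {"authType": "token", "token": token, "url": rc["OS_AUTH_URL"]}
    if user_id:
        # Token with user ID: the IDs come from the token output.
        data.update(userID=user_id, projectID=project_id)
    else:
        # Token with user name: the names come from the RC file.
        data.update(
            domainName=rc["OS_DOMAIN_NAME"],
            projectName=rc["OS_PROJECT_NAME"],
            username=rc["OS_USERNAME"],
        )
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "labels": {"createdForProviderType": "openstack"},
        },
        "type": "Opaque",
        "stringData": data,
    }


def render(manifest: dict) -> str:
    """Serialize the manifest as JSON for `oc apply -f -`."""
    return json.dumps(manifest, indent=2)
```

Keeping both variants in one function makes the only difference between them, the identity fields, explicit.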

Using application credential authentication with an OpenStack source provider

You can use application credential authentication, instead of username and password authentication, when you create an OpenStack source provider.

Forklift supports both of the following types of application credential authentication:

-

Application credential ID

-

Application credential name

For each type of application credential authentication, you need to use data from OpenStack to create a Secret manifest.

You have an OpenStack account.

-

In the dashboard of the OpenStack web console, click Project > API Access.

-

Expand Download OpenStack RC file and click OpenStack RC file.

The file that is downloaded, referred to here as `<openstack_rc_file>`, includes the following fields used for application credential authentication:

```
OS_AUTH_URL
OS_PROJECT_ID
OS_PROJECT_NAME
OS_DOMAIN_NAME
OS_USERNAME
```

-

To get the data needed for application credential authentication, run the following command:

```
$ openstack application credential create --role member --role reader --secret redhat forklift
```

The output, referred to here as `<openstack_credential_output>`, includes:

-

The `id` and `secret` that you need for authentication using an application credential ID

-

The `name` and `secret` that you need for authentication using an application credential name

-

Create a `Secret` manifest similar to the following:

-

For authentication using the application credential ID:

```
cat << EOF | oc apply -f -
apiVersion: v1
kind: Secret
metadata:
  name: openstack-secret-appid
  namespace: openshift-mtv
  labels:
    createdForProviderType: openstack
type: Opaque
stringData:
  authType: applicationcredential
  applicationCredentialID: <id_from_openstack_credential_output>
  applicationCredentialSecret: <secret_from_openstack_credential_output>
  url: <OS_AUTH_URL_from_openstack_rc_file>
EOF
```

-

For authentication using the application credential name:

```
cat << EOF | oc apply -f -
apiVersion: v1
kind: Secret
metadata:
  name: openstack-secret-appname
  namespace: openshift-mtv
  labels:
    createdForProviderType: openstack
type: Opaque
stringData:
  authType: applicationcredential
  applicationCredentialName: <name_from_openstack_credential_output>
  applicationCredentialSecret: <secret_from_openstack_credential_output>
  domainName: <OS_DOMAIN_NAME_from_openstack_rc_file>
  username: <OS_USERNAME_from_openstack_rc_file>
  url: <OS_AUTH_URL_from_openstack_rc_file>
EOF
```
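The two application credential variants differ only in a few keys, so one function can generate either. The following Python sketch assumes that you add the standard OpenStack client output option `-f json` to the `openstack application credential create` command so that its output can be parsed; otherwise, pass the values in by hand. The function names are hypothetical.

```python
import json
from typing import Dict


def credential_from_output(output_json: str) -> Dict[str, str]:
    """Pick id, name, and secret out of the credential command's JSON output."""
    data = json.loads(output_json)
    return {key: data[key] for key in ("id", "name", "secret")}


def app_credential_secret(name: str, namespace: str, auth_url: str, secret: str,
                          credential_id: str = "", credential_name: str = "",
                          domain_name: str = "", username: str = "") -> dict:
    """Build the application credential Secret for either the ID or the name variant."""
    if bool(credential_id) == bool(credential_name):
        raise ValueError("set exactly one of credential_id or credential_name")
    data = {
        "authType": "applicationcredential",
        "applicationCredentialSecret": secret,
        "url": auth_url,
    }
    if credential_id:
        data["applicationCredentialID"] = credential_id
    else:
        # The name variant also needs the domain and user name from the RC file.
        data.update(
            applicationCredentialName=credential_name,
            domainName=domain_name,
            username=username,
        )
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "labels": {"createdForProviderType": "openstack"},
        },
        "type": "Opaque",
        "stringData": data,
    }
```

Raising an error when both or neither identifier is given prevents a `Secret` that mixes the two variants.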

Open Virtual Appliance (OVA) prerequisites

The following prerequisites apply to Open Virtual Appliance (OVA) file migrations:

-

All OVA files are created by VMware vSphere.

Migration of OVA files that were not created by VMware vSphere but are compatible with vSphere might succeed. However, migration of such files is not supported by Forklift. Forklift supports only OVA files created by VMware vSphere.

-

The OVA files are in one or more folders under an NFS shared directory in one of the following structures:

-

In one or more compressed Open Virtualization Format (OVF) packages that hold all the VM information.

The filename of each compressed package must have the `.ova` extension. Several compressed packages can be stored in the same folder.

When this structure is used, Forklift scans the root folder and the first-level subfolders for compressed packages.

For example, if the NFS share is `/nfs`, then:

-

The folder `/nfs` is scanned.

-

The folder `/nfs/subfolder1` is scanned.

-

However, `/nfs/subfolder1/subfolder2` is not scanned.

-

In extracted OVF packages.

When this structure is used, Forklift scans the root folder, first-level subfolders, and second-level subfolders for extracted OVF packages.

However, there can be only one `.ovf` file in a folder. Otherwise, the migration fails.

For example, if the NFS share is `/nfs`, then:

-

The OVF file `/nfs/vm.ovf` is scanned.

-

The OVF file `/nfs/subfolder1/vm.ovf` is scanned.

-

The OVF file `/nfs/subfolder1/subfolder2/vm.ovf` is scanned.

-

However, the OVF file `/nfs/subfolder1/subfolder2/subfolder3/vm.ovf` is not scanned.
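The scan-depth rules above can be expressed as a small check, which might help before you point a provider at a large share. The following Python sketch only models the rules as described here; it is not Forklift's scanner. The function names are hypothetical, and the sketch works on path strings, so it does not touch the share.

```python
from collections import Counter
from pathlib import PurePosixPath
from typing import Iterable, List

# Deepest folder level below the NFS share root that is scanned, per file type.
MAX_DEPTH = {".ova": 1, ".ovf": 2}


def is_scanned(nfs_root: str, file_path: str) -> bool:
    """True if the documented scan depth reaches file_path."""
    path = PurePosixPath(file_path)
    rel = path.relative_to(nfs_root)  # raises ValueError for paths outside the share
    depth = len(rel.parts) - 1  # folders between the share root and the file
    limit = MAX_DEPTH.get(path.suffix)
    return limit is not None and depth <= limit


def folders_with_several_ovf(paths: Iterable[str]) -> List[str]:
    """Folders that hold more than one .ovf file, which makes the migration fail."""
    counts = Counter(
        str(PurePosixPath(p).parent) for p in paths if PurePosixPath(p).suffix == ".ovf"
    )
    return sorted(folder for folder, n in counts.items() if n > 1)
```

With the examples above, `/nfs/subfolder1/vm.ova` is scanned but `/nfs/subfolder1/subfolder2/vm.ova` is not, while an `.ovf` file at that second depth is still scanned.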

KubeVirt prerequisites

The following prerequisites apply to migrations from one KubeVirt cluster to another:

-

Both the source and destination KubeVirt clusters must have the same version of Forklift installed.

-

The source cluster must use KubeVirt 4.16 or later.

-

Migration from a later version of KubeVirt to an earlier one is not supported.

-

Migration from an earlier version of KubeVirt to a later version is supported if both are supported by the current version of Forklift. For example, if the current version of KubeVirt is 4.18, a migration from version 4.16 or 4.17 to version 4.18 is supported, but a migration from version 4.15 to any version is not.

It is strongly recommended to migrate only between clusters with the same version of KubeVirt, although migration from an earlier version of KubeVirt to a later one is supported.
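The version rules above can be summarized as a function. In the following Python sketch, the set of KubeVirt versions that the current Forklift release supports is passed in rather than hard-coded, because it changes between releases. The function names are hypothetical.

```python
from typing import Iterable, Tuple

MIN_SOURCE = (4, 16)  # the source cluster must use KubeVirt 4.16 or later


def minor_version(v: str) -> Tuple[int, int]:
    """'4.18' or '4.18.3' -> (4, 18)."""
    major, minor = v.split(".")[:2]
    return int(major), int(minor)


def kubevirt_migration_supported(source: str, target: str, supported: Iterable[str]) -> bool:
    """Apply the rules above; `supported` lists versions the current Forklift supports."""
    src, dst = minor_version(source), minor_version(target)
    supported_set = {minor_version(v) for v in supported}
    if src < MIN_SOURCE:
        return False
    if src > dst:  # migration from a later version to an earlier one
        return False
    return src in supported_set and dst in supported_set
```

Using the example in the list above, with `supported=["4.16", "4.17", "4.18"]`, a migration from 4.17 to 4.18 is allowed, while 4.15 to 4.18 and 4.18 to 4.17 are not.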

KubeVirt live migration prerequisites

In addition to the regular KubeVirt prerequisites, live migration has the following prerequisites:

-

Forklift 2.10.0 or later installed. For more information, see Upgrading the Forklift. Forklift treats all KubeVirt migrations run on Forklift 2.9 or earlier as cold migrations, even if they are configured as live migrations.

-

KubeVirt 4.20.0 or later installed on both source and target clusters.

-

In the Forklift Operator, `feature_ocp_live_migration` is set to `true` in the `spec` portion of the `forklift-controller` YAML. You must have `cluster-admin` privileges to set this field.

-

In the `KubeVirt` resource of both clusters, `DecentralizedLiveMigration` is listed in the `featureGates` of the YAML. You must have `cluster-admin` privileges to set this field.

-

Connectivity between the clusters must be established, including connectivity for state transfer. Technologies such as Submariner can be used for this purpose.

-

The target cluster has `VirtualMachineInstanceTypes` and `VirtualMachinePreferences` that match those used by the VMs on the source cluster.
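Several of these prerequisites can be read from versions and resources. The following Python sketch takes the versions and the parsed `ForkliftController` and `KubeVirt` resources (for example, loaded from `oc get` output in JSON format) and lists what is missing. It assumes that the feature gate is listed under `spec.configuration.developerConfiguration.featureGates` in the `KubeVirt` resource, and it accepts either `true` or `"true"` for the Forklift flag. The function names are hypothetical. It cannot check cluster connectivity, instance types, or preferences.

```python
from typing import Any, Dict, List, Tuple


def version_tuple(v: str) -> Tuple[int, int, int]:
    """'2.10' -> (2, 10, 0); pads missing components with zeros."""
    parts = [int(p) for p in v.split(".")][:3]
    parts += [0] * (3 - len(parts))
    return parts[0], parts[1], parts[2]


def live_migration_problems(
    forklift_version: str,
    kubevirt_versions: Dict[str, str],
    forklift_controller: Dict[str, Any],
    kubevirt_crs: Dict[str, Dict[str, Any]],
) -> List[str]:
    """List unmet live-migration prerequisites that can be read from versions and CRs."""
    problems = []
    if version_tuple(forklift_version) < (2, 10, 0):
        problems.append(f"Forklift {forklift_version} is older than 2.10.0")
    for cluster, version in kubevirt_versions.items():
        if version_tuple(version) < (4, 20, 0):
            problems.append(f"{cluster}: KubeVirt {version} is older than 4.20.0")
    flag = forklift_controller.get("spec", {}).get("feature_ocp_live_migration")
    if str(flag).lower() != "true":
        problems.append("forklift-controller: spec.feature_ocp_live_migration is not true")
    for cluster, cr in kubevirt_crs.items():
        # Assumed location of the feature gate list in the KubeVirt resource.
        gates = (
            cr.get("spec", {})
            .get("configuration", {})
            .get("developerConfiguration", {})
            .get("featureGates", [])
        )
        if "DecentralizedLiveMigration" not in gates:
            problems.append(f"{cluster}: DecentralizedLiveMigration feature gate missing")
    return problems
```

An empty list means only that the checked items look correct; the connectivity and instance type prerequisites still need to be verified separately.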

Software compatibility guidelines

You must install compatible software versions. The table that follows lists the relevant software versions for this version of Forklift.

| Forklift | OKD | KubeVirt | VMware vSphere | oVirt | OpenStack |
|---|---|---|---|---|---|
| 2.10 | 4.20, 4.19, 4.18 | 4.20, 4.19, 4.18 | 6.5 or later | 4.4 SP1 or later | 16.1 or later |

Migration from oVirt 4.3: Forklift was tested only with oVirt 4.4 SP1, and migration from oVirt 4.3 has not been tested with Forklift 2.10. While not supported, basic migrations from oVirt 4.3 are expected to work. Migrations from oVirt 4.3.11 were tested with Forklift 2.3 and might work in practice in many environments that use Forklift 2.10. Nevertheless, it is recommended to upgrade oVirt Manager to the supported version listed in the preceding table before migrating to KubeVirt.

OpenShift Operator Life Cycles

For more information about the software maintenance Life Cycle classifications for Operators shipped by Red Hat for use with OpenShift Container Platform, see OpenShift Operator Life Cycles.

Installing and configuring the Forklift Operator

You can install the Forklift Operator by using the OKD web console or the command-line interface (CLI).

In Forklift version 2.4 and later, the Forklift Operator includes the Forklift plugin for the OKD web console.

After you install the Forklift Operator by using either the OKD web console or the CLI, you can configure the Operator.

Installing the Forklift Operator by using the OKD web console

You can install the Forklift Operator by using the OKD web console.

-

OKD 4.20, 4.19, or 4.18 installed.

-

KubeVirt Operator installed on an OpenShift migration target cluster.

-

You must be logged in as a user with `cluster-admin` permissions.

-

In the OKD web console, click Operators → OperatorHub.

-

Use the Filter by keyword field to search for forklift-operator.

The Forklift Operator is a Community Operator. Red Hat does not support Community Operators.

-

Click Migration Toolkit for Virtualization Operator and then click Install.

-

Click Create ForkliftController when the button becomes active.

-

Click Create.

Your ForkliftController appears in the list that is displayed.

-

Click Workloads → Pods to verify that the Forklift pods are running.

-

Click Operators → Installed Operators to verify that Migration Toolkit for Virtualization Operator appears in the konveyor-forklift project with the status Succeeded.

When the plugin is ready, you are prompted to reload the page. The Migration menu item is automatically added to the navigation bar on the left of the OKD web console.

Installing the Forklift Operator by using the command-line interface

You can install the Forklift Operator by using the command-line interface (CLI).

-

OKD 4.20, 4.19, or 4.18 installed.

-

KubeVirt Operator installed on an OpenShift migration target cluster.

-

You must be logged in as a user with `cluster-admin` permissions.

-

Create the konveyor-forklift project:

```
$ cat << EOF | kubectl apply -f -
apiVersion: project.openshift.io/v1
kind: Project
metadata:
  name: konveyor-forklift
EOF
```

-

Create an `OperatorGroup` CR called `migration`:

```
$ cat << EOF | kubectl apply -f -
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: migration
  namespace: konveyor-forklift
spec:
  targetNamespaces:
    - konveyor-forklift
EOF
```

-

Create a `Subscription` CR for the Operator:

```
$ cat << EOF | kubectl apply -f -
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: forklift-operator
  namespace: konveyor-forklift
spec:
  channel: development
  installPlanApproval: Automatic
  name: forklift-operator
  source: community-operators
  sourceNamespace: openshift-marketplace
  startingCSV: "konveyor-forklift-operator.2.10.0"
EOF
```

-

Create a `ForkliftController` CR:

```
$ cat << EOF | kubectl apply -f -
apiVersion: forklift.konveyor.io/v1beta1
kind: ForkliftController
metadata:
  name: forklift-controller
  namespace: konveyor-forklift
spec:
  olm_managed: true
EOF
```

-

Verify that the Forklift pods are running:

```
$ kubectl get pods -n konveyor-forklift
```

The following is example output:

```
NAME                                                    READY   STATUS    RESTARTS   AGE
forklift-api-bb45b8db4-cpzlg                            1/1     Running   0          6m34s
forklift-controller-7649db6845-zd25p                    2/2     Running   0          6m38s
forklift-must-gather-api-78fb4bcdf6-h2r4m               1/1     Running   0          6m28s
forklift-operator-59c87cfbdc-pmkfc                      1/1     Running   0          28m
forklift-ui-plugin-5c5564f6d6-zpd85                     1/1     Running   0          6m24s
forklift-validation-7d84c74c6f-fj9xg                    1/1     Running   0          6m30s
forklift-volume-populator-controller-85d5cb64b6-mrlmc   1/1     Running   0          6m36s
```
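If you install from a script, you can check the same output programmatically. The following Python sketch parses the text that `kubectl get pods` prints and reports pods that are not `Running` or not fully ready, as well as expected pods that are absent. You supply the expected name prefixes; the example output above is one possible source, but the set of pods can differ between releases. The function names are hypothetical.

```python
from typing import Iterable, List


def _rows(output: str) -> List[List[str]]:
    """Split `kubectl get pods` output into rows of fields, dropping the header."""
    lines = [line for line in output.strip().splitlines() if line.strip()]
    return [line.split() for line in lines[1:]]


def unhealthy_pods(output: str) -> List[str]:
    """Pods that are not Running or whose containers are not all ready."""
    bad = []
    for fields in _rows(output):
        if len(fields) < 3:
            continue  # not a pod row
        name, ready, status = fields[0], fields[1], fields[2]
        ready_now, _, ready_total = ready.partition("/")
        if status != "Running" or ready_now != ready_total:
            bad.append(name)
    return bad


def missing_pods(output: str, expected_prefixes: Iterable[str]) -> List[str]:
    """Expected pod name prefixes that have no matching pod in the output."""
    names = [fields[0] for fields in _rows(output) if fields]
    return [p for p in expected_prefixes if not any(n.startswith(p + "-") for n in names)]
```

Matching on the prefix plus a hyphen keeps, for example, `forklift-controller` from matching `forklift-volume-populator-controller`.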

Configuring the Forklift Operator

You can configure the following settings of the Forklift Operator by modifying the ForkliftController custom resource (CR), or in the Settings section of the Overview page, unless otherwise indicated.

-

Maximum number of virtual machines (VMs) or disks per plan that Forklift can migrate simultaneously.

-

How long `must gather` reports are retained before being automatically deleted (`ForkliftController` CR only).

-

CPU limit allocated to the main controller container.

-

Memory limit allocated to the main controller container.

-

Interval at which a new snapshot is requested before initiating a warm migration.

-

Frequency with which the system checks the status of snapshot creation or removal during a warm migration.

-

Percentage of space in persistent volumes allocated as file system overhead when the `storageclass` is `filesystem` (`ForkliftController` CR only).

-

Fixed amount of additional space allocated in persistent block volumes. This setting is applicable for any `storageclass` that is block-based (`ForkliftController` CR only).

-

Configuration map of operating systems to preferences for vSphere source providers (`ForkliftController` CR only).

-

Configuration map of operating systems to preferences for oVirt source providers (`ForkliftController` CR only).

-

Whether to retain importer pods so that the Containerized Data Importer (CDI) does not delete them during migration (`ForkliftController` CR only).

The procedure for configuring these settings by using the user interface is presented in Configuring MTV settings. The procedure for configuring these settings by modifying the ForkliftController CR is presented following.

-

Change a parameter’s value in the `spec` section of the `ForkliftController` CR by adding the parameter and value as follows:

```
spec:
  parameter: value
```

Parameters that you can configure by using the CLI are shown in the table that follows, along with a description of each parameter and its default value.

| Parameter | Description | Default value |
|---|---|---|
| `controller_max_vm_inflight` | Varies with provider. For details, see Configuring the controller_max_vm_inflight parameter. | 20 |
| | The duration in hours for retaining `must gather` reports before they are automatically deleted. | |
| | The CPU limit allocated to the main controller container. | |
| | The memory limit allocated to the main controller container. | |
| | The interval in minutes at which a new snapshot is requested before initiating a warm migration. | |
| | The frequency in seconds with which the system checks the status of snapshot creation or removal during a warm migration. | |
| | Percentage of space in persistent volumes allocated as file system overhead when the `storageclass` is `filesystem`. | |
| | Fixed amount of additional space allocated in persistent block volumes. This setting is applicable for any `storageclass` that is block-based. | |
| | Config map for vSphere source providers. This config map maps the operating system of the incoming VM to a KubeVirt preference name. This config map needs to be in the namespace where the Forklift Operator is deployed. To see the list of preferences in your KubeVirt environment, open the OpenShift web console and click Virtualization → Preferences. Add values to the config map when this parameter has the default value. | |
| | Config map for oVirt source providers. This config map maps the operating system of the incoming VM to a KubeVirt preference name. This config map needs to be in the namespace where the Forklift Operator is deployed. To see the list of preferences in your KubeVirt environment, open the OpenShift web console and click Virtualization → Preferences. You can add values to the config map when this parameter has the default value. | |
| | Whether to retain importer pods so that the Containerized Data Importer (CDI) does not delete them during migration. | |
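Instead of editing the CR interactively, you can apply a JSON merge patch to its `spec` section. The following Python sketch builds such a patch and the matching `oc patch` command line. The resource name and namespace default to the values used in the CLI installation procedure. `controller_max_vm_inflight` is the parameter described in the following section; check any other parameter name you pass against your ForkliftController CR. The function names are hypothetical.

```python
import json
import shlex
from typing import Any


def spec_merge_patch(**params: Any) -> str:
    """JSON merge patch that sets the given keys under .spec."""
    return json.dumps({"spec": params}, sort_keys=True)


def oc_patch_command(patch: str, name: str = "forklift-controller",
                     namespace: str = "konveyor-forklift") -> str:
    """Shell command that applies the patch to the ForkliftController CR."""
    return " ".join([
        "oc", "patch", f"forkliftcontroller/{name}", "-n", namespace,
        "--type", "merge", "-p", shlex.quote(patch),
    ])
```

For example, `oc_patch_command(spec_merge_patch(controller_max_vm_inflight=10))` returns `oc patch forkliftcontroller/forklift-controller -n konveyor-forklift --type merge -p '{"spec": {"controller_max_vm_inflight": 10}}'`. A merge patch changes only the listed keys and leaves the rest of `spec` as it is.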

Configuring the controller_max_vm_inflight parameter

The value of the controller_max_vm_inflight parameter, which is shown in the UI as Max concurrent virtual machine migrations, varies by the source provider of the migration:

-

For all migrations except Open Virtual Appliance (OVA) or VMware migrations, the parameter specifies the maximum number of disks that Forklift can transfer simultaneously. In these migrations, Forklift migrates the disks in parallel. This means that if the combined number of disks that you want to migrate is greater than the value of the setting, additional disks must wait until the queue is free, without regard for whether a VM has finished migrating.

For example, if the value of the parameter is 15, and VM A has 5 disks, VM B has 5 disks, and VM C has 6 disks, all the disks except for the 16th disk start migrating at the same time. As soon as any of them finishes migrating, the 16th disk can start, even though not all the disks on VM A and VM B have finished migrating.

-

For OVA migrations, the parameter specifies the maximum number of VMs that Forklift can migrate simultaneously, meaning that all additional disks must wait until at least one VM has been completely migrated.

For example, if the value of the parameter is 2, and VM A has 5 disks, VM B has 5 disks, and VM C has 6 disks, all the disks on VM C must wait to migrate until either all the disks on VM A or on VM B finish migrating.

-

For VMware migrations, the parameter has the following meanings:

-

Cold migration:

-

To local KubeVirt: VMs for each ESXi host that can migrate simultaneously.

-

To remote KubeVirt: Disks for each ESXi host that can migrate simultaneously.

-

-

Warm migration: Disks for each ESXi host that can migrate simultaneously.

-
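The difference between the disk-level and VM-level limits is easiest to see by working through the examples above. The following Python sketch computes how many disks of each VM start in the first wave. It assumes that VMs and disks are queued in plan order, which this section does not state, and it does not model the per-ESXi-host behavior of VMware migrations. The function name is hypothetical.

```python
from typing import Dict


def first_wave(vm_disks: Dict[str, int], limit: int, per_vm: bool) -> Dict[str, int]:
    """Number of disks per VM that start at once under controller_max_vm_inflight.

    per_vm=False models the disk-level limit; per_vm=True models the
    VM-level limit described for OVA migrations.
    """
    started: Dict[str, int] = {}
    slots = limit
    for vm, disks in vm_disks.items():  # assumed: plan order
        if slots == 0:
            break
        if per_vm:
            started[vm] = disks  # a VM takes one slot, together with all its disks
            slots -= 1
        else:
            take = min(disks, slots)  # each disk takes one slot
            started[vm] = take
            slots -= take
    return started
```

With `{"A": 5, "B": 5, "C": 6}` and a disk-level limit of 15, VMs A and B start all their disks and VM C starts 5 of its 6; with a VM-level limit of 2, VMs A and B start and all six disks on VM C wait.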

Migrating virtual machines by using the OKD web console

Use the Forklift user interface to migrate virtual machines (VMs). It is located in the Virtualization section of the OKD web console.

The MTV user interface

The Forklift user interface is integrated into the OKD web console.

In the left panel, you can choose a page related to a component of the migration process, for example, Providers. Or, if you are an administrator, you can choose Overview, which contains information about migrations and lets you configure Forklift settings.

In pages related to components, you can click on the Projects list, which is in the upper-left portion of the page, and see which projects (namespaces) you are allowed to work with.

The MTV Overview page

The Forklift Overview page displays system-wide information about migrations and a list of Settings you can change.

If you have Administrator privileges, you can access the Overview page by clicking Migration → Overview in the OKD web console.

The Overview page has five tabs:

-

Overview

-

YAML

-

Health

-

History

-

Settings

Overview tab

The Overview tab helps you quickly create providers and find information about the whole system:

-

In the upper pane is the Welcome section, which includes buttons that let you open the Create provider UI for each vendor (VMware, Open Virtual Appliance, OpenStack, oVirt, and KubeVirt). You can close this section by clicking the Options menu in the upper-right corner and selecting Hide from view. You can reopen it by clicking Show the welcome card in the upper-right corner.

-

In the center-left pane is a "donut" chart named Virtual machines. This chart shows the number of running, failed, and successful virtual machine migrations that Forklift ran for the time interval that you select. You can select a different interval by clicking the list in the upper-right corner of the pane. The options are: Last 24 hours, Last 10 days, Last 31 days, and All. By clicking each division of the chart, you can navigate to the History tab for information about the migrations.

Data for this chart includes only the most recent run of a migration plan that was modified due to a failure. For example, if a plan with 3 VMs fails 4 times, then this chart shows that 3 VMs failed, not 12.

-

In the center-right pane is an area chart named Migration history. This chart shows the number of migrations that succeeded, failed, or were running during the interval shown in the title of the chart. You can choose a different interval by clicking the Options menu in the upper-right corner of the pane. The options are: Last 24 hours, Last 10 days, and Last 31 days. By clicking each division of the chart, you can navigate to the History tab for information about the migrations.

-

In the lower-left pane is a "donut" chart named Migration plans. This chart shows the current number of migration plans grouped by their status. This includes plans that were not started, cannot be started, are incomplete, archived, paused, or have an unknown status. By clicking the Show all plans link, you can quickly navigate to the Migration plans page.

Since a single migration might involve many virtual machines, the number of migrations performed using Forklift might vary significantly from the number of migrated virtual machines.

-

In the lower-right pane is a table named Forklift health. This table lists all of the Forklift pods. The most important one, `forklift-controller`, is first. The remaining pods are listed in alphabetical order. The View all link opens the Health tab. The status and creation time of each pod are listed, and there is also a link to the logs of each pod.

YAML tab

The YAML tab displays the ForkliftController custom resource (CR) that defines the operation of the Forklift Operator. You can modify the CR in this tab.

Health tab

The Health tab has two panes:

-

In the upper pane, there is a table named Health. It lists all the Forklift pods. The most important one, forklift-controller, is first. The remaining pods are listed in alphabetical order. For each pod, the status and creation time are listed, along with a link to the logs of the pod.

-

In the lower pane, there is a table named Conditions. It lists the possible types (states) of the Forklift Operator and, for each type, its status, the last time the condition was updated, the reason for the update, and a message about the condition.

History tab

The History tab displays information about migrations.

-

In the upper-left of the page, there is a filter that you can use to display only migrations of a certain status, for example, Succeeded.

-

To the right of the filter is the Group by plan toggle switch, which lets you display either all migrations or only the most recent migration run of each plan within the specified time range.
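The effect of the Group by plan toggle can be sketched in Python. The Migration record and the history_view helper are hypothetical illustrations, not Forklift code, and the 31-day window in the usage example is chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List


@dataclass
class Migration:
    plan: str          # hypothetical: migration plan name
    started: datetime  # hypothetical: when this migration run started
    status: str        # for example "Succeeded", "Failed", or "Running"


def history_view(
    migrations: List[Migration],
    now: datetime,
    window: timedelta,
    group_by_plan: bool,
) -> List[Migration]:
    """Return migrations started within `window` before `now`, oldest first.

    With group_by_plan=True, keep only the most recent run of each plan.
    """
    in_range = [m for m in migrations if now - m.started <= window]
    if group_by_plan:
        latest: Dict[str, Migration] = {}
        for m in in_range:
            if m.plan not in latest or m.started > latest[m.plan].started:
                latest[m.plan] = m
        in_range = list(latest.values())
    return sorted(in_range, key=lambda m: m.started)


now = datetime(2024, 1, 10, 12, 0)
runs = [
    Migration("plan-a", now - timedelta(hours=30), "Failed"),
    Migration("plan-a", now - timedelta(hours=2), "Succeeded"),
    Migration("plan-b", now - timedelta(hours=5), "Running"),
    Migration("plan-b", now - timedelta(days=40), "Failed"),  # outside the window
]
window = timedelta(days=31)
print(len(history_view(runs, now, window, group_by_plan=False)))  # 3
print([(m.plan, m.status) for m in history_view(runs, now, window, group_by_plan=True)])
# [('plan-b', 'Running'), ('plan-a', 'Succeeded')]
```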

Settings tab

The following table describes the settings that are visible in the Settings tab, their default values, and the other values that you can set or choose, if needed.

| Setting | Description | Default value | Additional values |
|---|---|---|---|
| Maximum concurrent VM migrations | The maximum number of concurrent VM migrations. The exact meaning varies with the provider. | 20 | Adjustable by either using the + and - keys to set a different value or by clicking the text box and entering a new value. |
| Controller main container CPU limit | The CPU limit that is allocated to the main controller container, in milliCPUs (m). | 500 m | Adjustable by selecting another value from the list. Options: 200 m, 500 m, 2000 m, 8000 m. |
| Controller main container memory limit | The memory limit that is allocated to the main controller container, in mebibytes (Mi). | 800 Mi | Adjustable by selecting another value from the list. Options: 200 Mi, 800 Mi, 2000 Mi, 8000 Mi. |
| Controller inventory container memory limit | The memory limit that is allocated to the inventory controller container, in mebibytes (Mi). | 1000 Mi | Adjustable by selecting another value from the list. Options: 400 Mi, 1000 Mi, 2000 Mi, 8000 Mi. |
| Precopy interval (minutes) | The interval, in minutes, at which a new snapshot is requested before a warm migration is initiated. | 60 minutes | Adjustable by selecting another value from the list. Options: 5 minutes, 30 minutes, 60 minutes, 120 minutes. |
| Snapshot polling interval | The interval, in seconds, at which the system checks the status of snapshot creation or removal during a warm migration. | 10 seconds | Adjustable by selecting another value from the list. Options: 1 second, 5 seconds, 10 seconds, 60 seconds. |
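The settings table can be encoded as data and used to check a proposed value before you change it. This is a hypothetical sketch: the SETTINGS dictionary and check_setting helper are not part of Forklift, and the "positive integer" rule for the free-entry field is an assumption because the table gives no bounds. The m and Mi suffixes follow Kubernetes resource quantity notation (milliCPU and mebibyte).

```python
from typing import Dict, Optional, Tuple

# Hypothetical encoding of the Settings tab table:
# setting name -> (default, allowed options or None for free entry, unit suffix).
SETTINGS: Dict[str, Tuple[int, Optional[Tuple[int, ...]], str]] = {
    "Maximum concurrent VM migrations": (20, None, ""),
    "Controller main container CPU limit": (500, (200, 500, 2000, 8000), "m"),
    "Controller main container memory limit": (800, (200, 800, 2000, 8000), "Mi"),
    "Controller inventory container memory limit": (1000, (400, 1000, 2000, 8000), "Mi"),
    "Precopy interval (minutes)": (60, (5, 30, 60, 120), ""),
    "Snapshot polling interval": (10, (1, 5, 10, 60), ""),
}


def check_setting(name: str, value: Optional[int] = None) -> str:
    """Validate a value against the table and return it with its unit suffix.

    If value is None, the default value from the table is used.
    """
    default, options, unit = SETTINGS[name]
    if value is None:
        value = default
    if options is None:
        # Assumption: free-entry field, so only require a positive integer.
        if value < 1:
            raise ValueError(f"{name}: value must be a positive integer, got {value}")
    elif value not in options:
        raise ValueError(f"{name}: {value} is not one of {options}")
    return f"{value}{unit}"


print(check_setting("Controller main container CPU limit"))           # 500m
print(check_setting("Controller main container memory limit", 2000))  # 2000Mi
print(check_setting("Maximum concurrent VM migrations", 5))           # 5
```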

Migrating virtual machines using the MTV user interface

Use the Forklift user interface to migrate VMs from the following providers:

-

VMware vSphere

-

oVirt

-

OpenStack

-

Open Virtual Appliances (OVAs) that were created by VMware vSphere

-

KubeVirt clusters

For all migrations, you specify the source provider, the destination provider, and the migration plan. The specific procedures vary per provider.

Ensure that all the requirements in Prerequisites and software requirements for all providers and in Specific software requirements for each provider are met. VMware only: You must have the minimal set of VMware privileges. VMware only: Creating a VMware Virtual Disk Development Kit (VDDK) image increases migration speed.

Migrating virtual machines from VMware vSphere

Adding a VMware vSphere source provider

You can migrate VMware vSphere VMs from VMware vCenter or from a VMware ESX/ESXi server without going through vCenter.

EMS enforcement is disabled for migrations with VMware vSphere source providers to enable migrations from versions of vSphere that are supported by Forklift but do not comply with the 2023 FIPS requirements. Therefore, consider whether migrations from vSphere source providers put your FIPS compliance at risk. Supported versions of vSphere are specified in Software compatibility guidelines.

Anti-virus software can cause migrations to fail. It is strongly recommended to remove such software from source VMs before you start a migration.

Forklift does not support migrating VMware Non-Volatile Memory Express (NVMe) disks.

If you set a maximum transmission unit (MTU) value other than the default in your migration network, you must set the same value in the OKD transfer network that you use. For more information about the OKD transfer network, see Creating a VMware vSphere migration plan by using the MTV wizard.

-

It is strongly recommended to create a VMware Virtual Disk Development Kit (VDDK) image in a secure registry that is accessible to all clusters. A VDDK image accelerates migration and reduces the risk of a plan failing. If you are not using VDDK and a plan fails, retry with VDDK installed. For more information, see Creating a VDDK image.

Virtual machine (VM) migrations do not work without VDDK when a VM is backed by VMware vSAN.

-

Access the Create provider page for VMware by doing one of the following:

-

In the OKD web console, click Migration for Virtualization > Providers.

-

Click Create Provider.

-

Select a Project from the list. The default project shown depends on the active project of Forklift.

If the active project is All projects, then the default project is openshift-mtv. Otherwise, the default project is the same as the active project. If you have Administrator privileges, you can see all projects; otherwise, you can see only the projects you are authorized to work with.

-

Click VMware.

-

-

If you have Administrator privileges, in the OKD web console, click Migration for Virtualization > Overview.

-

In the Welcome pane, click VMware.

If the Welcome pane is not visible, click Show the welcome card in the upper-right corner of the page, and click VMware when the Welcome pane opens.

-

Select a Project from the list. The default project shown depends on the active project of Forklift.

If the active project is All projects, then the default project is openshift-mtv. Otherwise, the default project is the same as the active project. If you have Administrator privileges, you can see all projects; otherwise, you can see only the projects you are authorized to work with.

-

-

-

Specify the following fields:

-

Provider details

-

Provider resource name: Name of the source provider.

-

Endpoint type: Select the vSphere provider endpoint type. Options: vCenter or ESXi. You can migrate virtual machines from vCenter, an ESX/ESXi server that is not managed by vCenter, or from an ESX/ESXi server that is managed by vCenter but does not go through vCenter.

-

URL: URL of the SDK endpoint of the vCenter on which the source VM is mounted. Ensure that the URL includes the sdk path, usually /sdk. For example, https://vCenter-host-example.com/sdk. If a certificate for an FQDN is specified, the value of this field must match the FQDN in the certificate.

-

VDDK init image: VDDKInitImage path. It is strongly recommended to create a VDDK init image to accelerate migrations. For more information, see Creating a VDDK image. Do one of the following:

-

Select the Skip VMWare Virtual Disk Development Kit (VDDK) SDK acceleration (not recommended).

-

Enter the path in the VDDK init image text box. Format: <registry_route_or_server_path>/vddk:<tag>.

-

Upload a VDDK archive and build a VDDK init image from the archive by doing the following:

-

Click Browse next to the VDDK init image archive text box, select the desired file, and click Select.

-

Click Upload.

The URL of the uploaded archive is displayed in the VDDK init image archive text box.

-

-

-

-

Provider credentials

-

Username: vCenter user or ESXi user. For example, user@vsphere.local.

-

Password: vCenter user password or ESXi user password.

-

-

-

Choose one of the following options for validating CA certificates:

-

Use a custom CA certificate: Migrate after validating a custom CA certificate.

-

Use the system CA certificate: Migrate after validating the system CA certificate.

-

Skip certificate validation: Migrate without validating a CA certificate.

-

To use a custom CA certificate, leave the Skip certificate validation switch toggled to the left, and either drag the CA certificate to the text box or browse for it and click Select.

-

To use the system CA certificate, leave the Skip certificate validation switch toggled to the left, and leave the CA certificate text box empty.

-

To skip certificate validation, toggle the Skip certificate validation switch to the right.

-

-

-
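The field rules for the VMware provider form (the default project, the /sdk URL path, and the VDDK init image format) can be checked before you submit the form. This is a hypothetical client-side sketch; the helper names are illustrative and not part of Forklift. The tag pattern is an assumption borrowed from the Docker/OCI image tag grammar.

```python
import re
from typing import List, Tuple
from urllib.parse import urlparse

ALL_PROJECTS = "All projects"
FALLBACK_PROJECT = "openshift-mtv"

# <registry_route_or_server_path>/vddk:<tag>; the tag follows the Docker/OCI grammar:
# a word character, then up to 127 word characters, dots, or dashes.
VDDK_IMAGE = re.compile(r"^(?P<registry>\S+)/vddk:(?P<tag>\w[\w.-]{0,127})$")


def default_project(active_project: str) -> str:
    """Project preselected in the Create provider page."""
    return FALLBACK_PROJECT if active_project == ALL_PROJECTS else active_project


def sdk_url_problems(url: str) -> List[str]:
    """Return problems found in a vCenter or ESXi SDK URL (empty list if none)."""
    problems: List[str] = []
    parsed = urlparse(url)
    if not parsed.hostname:
        problems.append("missing host name")
    if parsed.path.rstrip("/") != "/sdk":
        problems.append("path is not /sdk, the usual SDK path")
    return problems


def parse_vddk_image(ref: str) -> Tuple[str, str]:
    """Split a VDDK init image reference into (registry path, tag)."""
    match = VDDK_IMAGE.match(ref)
    if match is None:
        raise ValueError(f"expected <registry_route_or_server_path>/vddk:<tag>, got {ref!r}")
    return match.group("registry"), match.group("tag")


print(default_project("All projects"))                          # openshift-mtv
print(sdk_url_problems("https://vCenter-host-example.com/sdk"))  # []
print(parse_vddk_image("registry.example.com:5000/ns/vddk:8.0.1"))
# ('registry.example.com:5000/ns', '8.0.1')
```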
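As an analogy for the three CA validation options, the sketch below shows how they correspond to certificate verification in a generic Python TLS client built with the standard ssl module. It illustrates what each option means; it is not how Forklift implements validation, and the option labels "custom", "system", and "skip" are hypothetical.

```python
import ssl
from typing import Optional


def tls_context(option: str, ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a client TLS context for one of the three CA validation options."""
    if option == "custom":
        if ca_file is None:
            raise ValueError("a custom CA certificate file is required")
        # Trust only the supplied CA certificate file.
        return ssl.create_default_context(cafile=ca_file)
    if option == "system":
        # Trust the system's default CA store.
        return ssl.create_default_context()
    if option == "skip":
        ctx = ssl.create_default_context()
        ctx.check_hostname = False  # must be disabled before setting CERT_NONE
        ctx.verify_mode = ssl.CERT_NONE
        return ctx
    raise ValueError(f"unknown option: {option!r}")


print(tls_context("system").verify_mode == ssl.CERT_REQUIRED)  # True
print(tls_context("skip").verify_mode == ssl.CERT_NONE)        # True
```

Skipping validation removes protection against man-in-the-middle attacks, which is why the custom or system CA options are preferable when a trusted certificate is available.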